Note

Access to this page requires authorization. You can try signing in or changing directories.

Access to this page requires authorization. You can try changing directories.

Azure AI Search can extract and index both text and images from PDF documents stored in Azure Blob Storage. This tutorial shows you how to build a multimodal indexing pipeline in Azure AI Search that chunks data using the built-in Text Split skill and uses multimodal embeddings to vectorize text and images from the same document. Cropped images are stored in a knowledge store, and both text and visual content are vectorized and ingested in a searchable index.

In this tutorial, you use:

A 36-page PDF document that combines rich visual content, such as charts, infographics, and scanned pages, with traditional text.

An indexer and skillset to create an indexing pipeline that includes AI enrichment through skills.

The Document Extraction skill for extracting normalized images and text. The Text Split skill chunks the data.

The Azure AI Vision multimodal embeddings skill to vectorize text and images.

A search index configured to store extracted text and image content. Some content is vectorized for vector-based similarity search.

This tutorial demonstrates a lower-cost approach for indexing multimodal content using the Document Extraction skill. It enables extraction and search over both text and images from documents pulled from Azure Blob Storage. However, it doesn't include locational metadata for text, such as page numbers or bounding regions. For a more comprehensive solution that includes structured text layout and spatial metadata, see Tutorial: Vectorize from a structured document layout.

Note

Image extraction by the Document Extraction skill isn't free. Setting imageAction to generateNormalizedImages in the skillset triggers image extraction, which is an extra charge. For billing information, see Azure AI Search pricing.

Prerequisites

Azure AI services multi-service account. This account provides access to the Azure AI Vision multimodal embedding model used in this tutorial. You must use an Azure AI multi-service account for skillset access to this resource.

Azure AI Search. Configure your search service for role-based access control and a managed identity for connections to Azure Storage and Azure AI Vision. Your service must be on the Basic tier or higher. This tutorial isn't supported on the Free tier.

Azure Storage, used for storing sample data and for creating a knowledge store.

Visual Studio Code with a REST client.

Limitations

- The Azure AI Vision multimodal embeddings skill has limited regional availability. When you install the multi-service account, choose a region that provides multimodal embeddings. For an updated list of regions that provide multimodal embeddings, see the Azure AI Vision documentation.

Prepare data

The following instructions apply to Azure Storage which provides the sample data and also hosts the knowledge store. A search service identity needs read access to Azure Storage to retrieve the sample data, and it needs write access to create the knowledge store. The search service creates the container for cropped images during skillset processing, using the name you provide in an environment variable.

Download the following sample PDF: sustainable-ai-pdf

In Azure Storage, create a new container named sustainable-ai-pdf.

Create role assignments and specify a managed identity in a connection string:

Assign Storage Blob Data Reader for data retrieval by the indexer. Assign Storage Blob Data Contributor and Storage Table Data Contributor to create and load the knowledge store. You can use either a system-assigned managed identity or a user-assigned managed identity for your search service role assignment.

For connections made using a system-assigned managed identity, get a connection string that contains a ResourceId, with no account key or password. The ResourceId must include the subscription ID of the storage account, the resource group of the storage account, and the storage account name. The connection string is similar to the following example:

"credentials" : { "connectionString" : "ResourceId=/subscriptions/00000000-0000-0000-0000-00000000/resourceGroups/MY-DEMO-RESOURCE-GROUP/providers/Microsoft.Storage/storageAccounts/MY-DEMO-STORAGE-ACCOUNT/;" }For connections made using a user-assigned managed identity, get a connection string that contains a ResourceId, with no account key or password. The ResourceId must include the subscription ID of the storage account, the resource group of the storage account, and the storage account name. Provide an identity using the syntax shown in the following example. Set userAssignedIdentity to the user-assigned managed identity. The connection string is similar to the following example:

"credentials" : { "connectionString" : "ResourceId=/subscriptions/00000000-0000-0000-0000-00000000/resourceGroups/MY-DEMO-RESOURCE-GROUP/providers/Microsoft.Storage/storageAccounts/MY-DEMO-STORAGE-ACCOUNT/;" }, "identity" : { "@odata.type": "#Microsoft.Azure.Search.DataUserAssignedIdentity", "userAssignedIdentity" : "/subscriptions/00000000-0000-0000-0000-00000000/resourcegroups/MY-DEMO-RESOURCE-GROUP/providers/Microsoft.ManagedIdentity/userAssignedIdentities/MY-DEMO-USER-MANAGED-IDENTITY" }

Prepare models

This tutorial assumes you have an existing Azure AI multiservice account through which the skill calls the Azure AI Vision multimodal 4.0 embedding model. The search service connects to the model during skillset processing using its managed identity. This section gives you guidance and links for assigning roles for authorized access.

Sign in to the Azure portal (not the Foundry portal) and find the Azure AI multiservice account. Make sure it's in a region that provides the multimodal 4.0 API.

Select Access control (IAM).

Select Add and then Add role assignment.

Search for Cognitive Services User and then select it.

Choose Managed identity and then assign your search service managed identity.

Set up your REST file

For this tutorial, your local REST client connection to Azure AI Search requires an endpoint and an API key. You can get these values from the Azure portal. For alternative connection methods, see Connect to a search service.

For authenticated connections that occur during indexer and skillset processing, the search service uses the role assignments you previously defined.

Start Visual Studio Code and create a new file.

Provide values for variables used in the request. For

@storageConnection, make sure your connection string doesn't have a trailing semicolon or quotation marks. For@imageProjectionContainer, provide a container name that's unique in blob storage. Azure AI Search creates this container for you during skills processing.@searchUrl = PUT-YOUR-SEARCH-SERVICE-ENDPOINT-HERE @searchApiKey = PUT-YOUR-ADMIN-API-KEY-HERE @storageConnection = PUT-YOUR-STORAGE-CONNECTION-STRING-HERE @cognitiveServicesUrl = PUT-YOUR-AZURE-AI-MULTI-SERVICE-ENDPOINT-HERE @modelVersion = 2023-04-15 @imageProjectionContainer=sustainable-ai-pdf-imagesSave the file using a

.restor.httpfile extension. For help with the REST client, see Quickstart: Full-text search using REST.

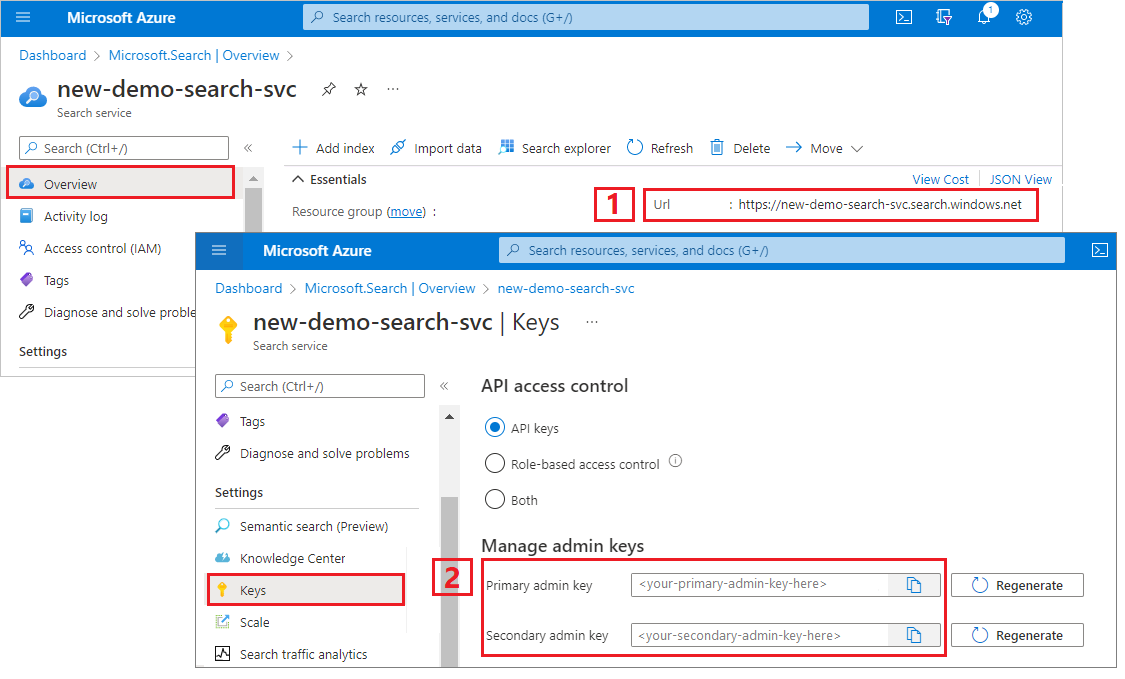

To get the Azure AI Search endpoint and API key:

Sign in to the Azure portal, navigate to the search service Overview page, and copy the URL. An example endpoint might look like

https://mydemo.search.windows.net.Under Settings > Keys, copy an admin key. Admin keys are used to add, modify, and delete objects. There are two interchangeable admin keys. Copy either one.

Create a data source

Create Data Source (REST) creates a data source connection that specifies what data to index.

POST {{searchUrl}}/datasources?api-version=2025-05-01-preview HTTP/1.1

Content-Type: application/json

api-key: {{searchApiKey}}

{

"name":"doc-extraction-multimodal-embedding-ds",

"description":null,

"type":"azureblob",

"subtype":null,

"credentials":{

"connectionString":"{{storageConnection}}"

},

"container":{

"name":"sustainable-ai-pdf",

"query":null

},

"dataChangeDetectionPolicy":null,

"dataDeletionDetectionPolicy":null,

"encryptionKey":null,

"identity":null

}

Send the request. The response should look like:

HTTP/1.1 201 Created

Transfer-Encoding: chunked

Content-Type: application/json; odata.metadata=minimal; odata.streaming=true; charset=utf-8

Location: https://<YOUR-SEARCH-SERVICE-NAME>.search.windows-int.net:443/datasources('doc-extraction-multimodal-embedding-ds')?api-version=2025-05-01-preview -Preview

Server: Microsoft-IIS/10.0

Strict-Transport-Security: max-age=2592000, max-age=15724800; includeSubDomains

Preference-Applied: odata.include-annotations="*"

OData-Version: 4.0

request-id: 4eb8bcc3-27b5-44af-834e-295ed078e8ed

elapsed-time: 346

Date: Sat, 26 Apr 2025 21:25:24 GMT

Connection: close

{

"name": "doc-extraction-multimodal-embedding-ds",

"description": null,

"type": "azureblob",

"subtype": null,

"indexerPermissionOptions": [],

"credentials": {

"connectionString": null

},

"container": {

"name": "sustainable-ai-pdf",

"query": null

},

"dataChangeDetectionPolicy": null,

"dataDeletionDetectionPolicy": null,

"encryptionKey": null,

"identity": null

}

Create an index

Create Index (REST) creates a search index on your search service. An index specifies all the parameters and their attributes.

For nested JSON, the index fields must be identical to the source fields. Currently, Azure AI Search doesn't support field mappings to nested JSON, so field names and data types must match completely. The following index aligns to the JSON elements in the raw content.

### Create an index

POST {{searchUrl}}/indexes?api-version=2025-05-01-preview HTTP/1.1

Content-Type: application/json

api-key: {{searchApiKey}}

{

"name": "doc-extraction-multimodal-embedding-index",

"fields": [

{

"name": "content_id",

"type": "Edm.String",

"retrievable": true,

"key": true,

"analyzer": "keyword"

},

{

"name": "text_document_id",

"type": "Edm.String",

"searchable": false,

"filterable": true,

"retrievable": true,

"stored": true,

"sortable": false,

"facetable": false

},

{

"name": "document_title",

"type": "Edm.String",

"searchable": true

},

{

"name": "image_document_id",

"type": "Edm.String",

"filterable": true,

"retrievable": true

},

{

"name": "content_text",

"type": "Edm.String",

"searchable": true,

"retrievable": true

},

{

"name": "content_embedding",

"type": "Collection(Edm.Single)",

"dimensions": 1024,

"searchable": true,

"retrievable": true,

"vectorSearchProfile": "hnsw"

},

{

"name": "content_path",

"type": "Edm.String",

"searchable": false,

"retrievable": true

},

{

"name": "offset",

"type": "Edm.String",

"searchable": false,

"retrievable": true

},

{

"name": "location_metadata",

"type": "Edm.ComplexType",

"fields": [

{

"name": "page_number",

"type": "Edm.Int32",

"searchable": false,

"retrievable": true

},

{

"name": "bounding_polygons",

"type": "Edm.String",

"searchable": false,

"retrievable": true,

"filterable": false,

"sortable": false,

"facetable": false

}

]

}

],

"vectorSearch": {

"profiles": [

{

"name": "hnsw",

"algorithm": "defaulthnsw",

"vectorizer": "demo-vectorizer"

}

],

"algorithms": [

{

"name": "defaulthnsw",

"kind": "hnsw",

"hnswParameters": {

"m": 4,

"efConstruction": 400,

"metric": "cosine"

}

}

],

"vectorizers": [

{

"name": "demo-vectorizer",

"kind": "aiServicesVision",

"aiServicesVisionParameters": {

"resourceUri": "{{cognitiveServicesUrl}}",

"authIdentity": null,

"modelVersion": "{{modelVersion}}"

}

}

]

},

"semantic": {

"defaultConfiguration": "semanticconfig",

"configurations": [

{

"name": "semanticconfig",

"prioritizedFields": {

"titleField": {

"fieldName": "document_title"

},

"prioritizedContentFields": [

],

"prioritizedKeywordsFields": []

}

}

]

}

}

Key points:

Text and image embeddings are stored in the

content_embeddingfield and must be configured with appropriate dimensions, such as 1024, and a vector search profile.location_metadatacaptures bounding polygon and page number metadata for each normalized image, enabling precise spatial search or UI overlays.location_metadataonly exists for images in this scenario. If you'd like to capture locational metadata for text as well, consider using Document Layout skill. An in-depth tutorial is linked at the bottom of the page.For more information on vector search, see Vectors in Azure AI Search.

For more information on semantic ranking, see Semantic ranking in Azure AI Search

Create a skillset

Create Skillset (REST) creates a skillset on your search service. A skillset defines the operations that chunk and embed content prior to indexing. This skillset uses the built-in Document Extraction skill to extract text and images. It uses Text Split skill to chunk large text. It uses Azure AI Vision multimodal embeddings skill to vectorize image and text content.

### Create a skillset

POST {{searchUrl}}/skillsets?api-version=2025-05-01-preview HTTP/1.1

Content-Type: application/json

api-key: {{searchApiKey}}

{

"name": "doc-extraction-multimodal-embedding-skillset",

"description": "A test skillset",

"skills": [

{

"@odata.type": "#Microsoft.Skills.Util.DocumentExtractionSkill",

"name": "document-extraction-skill",

"description": "Document extraction skill to extract text and images from documents",

"parsingMode": "default",

"dataToExtract": "contentAndMetadata",

"configuration": {

"imageAction": "generateNormalizedImages",

"normalizedImageMaxWidth": 2000,

"normalizedImageMaxHeight": 2000

},

"context": "/document",

"inputs": [

{

"name": "file_data",

"source": "/document/file_data"

}

],

"outputs": [

{

"name": "content",

"targetName": "extracted_content"

},

{

"name": "normalized_images",

"targetName": "normalized_images"

}

]

},

{

"@odata.type": "#Microsoft.Skills.Text.SplitSkill",

"name": "split-skill",

"description": "Split skill to chunk documents",

"context": "/document",

"defaultLanguageCode": "en",

"textSplitMode": "pages",

"maximumPageLength": 2000,

"pageOverlapLength": 200,

"unit": "characters",

"inputs": [

{

"name": "text",

"source": "/document/extracted_content",

"inputs": []

}

],

"outputs": [

{

"name": "textItems",

"targetName": "pages"

}

]

},

{

"@odata.type": "#Microsoft.Skills.Vision.VectorizeSkill",

"name": "text-embedding-skill",

"description": "Vision Vectorization skill for text",

"context": "/document/pages/*",

"modelVersion": "{{modelVersion}}",

"inputs": [

{

"name": "text",

"source": "/document/pages/*"

}

],

"outputs": [

{

"name": "vector",

"targetName": "text_vector"

}

]

},

{

"@odata.type": "#Microsoft.Skills.Vision.VectorizeSkill",

"name": "image-embedding-skill",

"description": "Vision Vectorization skill for images",

"context": "/document/normalized_images/*",

"modelVersion": "{{modelVersion}}",

"inputs": [

{

"name": "image",

"source": "/document/normalized_images/*"

}

],

"outputs": [

{

"name": "vector",

"targetName": "image_vector"

}

]

},

{

"@odata.type": "#Microsoft.Skills.Util.ShaperSkill",

"name": "shaper-skill",

"description": "Shaper skill to reshape the data to fit the index schema",

"context": "/document/normalized_images/*",

"inputs": [

{

"name": "normalized_images",

"source": "/document/normalized_images/*",

"inputs": []

},

{

"name": "imagePath",

"source": "='{{imageProjectionContainer}}/'+$(/document/normalized_images/*/imagePath)",

"inputs": []

},

{

"name": "dataUri",

"source": "='data:image/jpeg;base64,'+$(/document/normalized_images/*/data)",

"inputs": []

},

{

"name": "location_metadata",

"sourceContext": "/document/normalized_images/*",

"inputs": [

{

"name": "page_number",

"source": "/document/normalized_images/*/pageNumber"

},

{

"name": "bounding_polygons",

"source": "/document/normalized_images/*/boundingPolygon"

}

]

}

],

"outputs": [

{

"name": "output",

"targetName": "new_normalized_images"

}

]

}

],

"cognitiveServices": {

"@odata.type": "#Microsoft.Azure.Search.AIServicesByIdentity",

"subdomainUrl": "{{cognitiveServicesUrl}}",

"identity": null

},

"indexProjections": {

"selectors": [

{

"targetIndexName": "doc-extraction-multimodal-embedding-index",

"parentKeyFieldName": "text_document_id",

"sourceContext": "/document/pages/*",

"mappings": [

{

"name": "content_embedding",

"source": "/document/pages/*/text_vector"

},

{

"name": "content_text",

"source": "/document/pages/*"

},

{

"name": "document_title",

"source": "/document/document_title"

}

]

},

{

"targetIndexName": "doc-extraction-multimodal-embedding-index",

"parentKeyFieldName": "image_document_id",

"sourceContext": "/document/normalized_images/*",

"mappings": [

{

"name": "content_embedding",

"source": "/document/normalized_images/*/image_vector"

},

{

"name": "content_path",

"source": "/document/normalized_images/*/new_normalized_images/imagePath"

},

{

"name": "location_metadata",

"source": "/document/normalized_images/*/new_normalized_images/location_metadata"

},

{

"name": "document_title",

"source": "/document/document_title"

}

]

}

],

"parameters": {

"projectionMode": "skipIndexingParentDocuments"

}

},

"knowledgeStore": {

"storageConnectionString": "{{storageConnection}}",

"identity": null,

"projections": [

{

"files": [

{

"storageContainer": "{{imageProjectionContainer}}",

"source": "/document/normalized_images/*"

}

]

}

]

}

}

This skillset extracts text and images, vectorizes both, and shapes the image metadata for projection into the index.

Key points:

The

content_textfield is populated with text extracted using the Document Extraction Skill and chunked using the Split Skillcontent_pathcontains the relative path to the image file within the designated image projection container. This field is generated only for images extracted from PDFs whenimageActionis set togenerateNormalizedImages, and can be mapped from the enriched document from the source field/document/normalized_images/*/imagePath.The Azure AI Vision multimodal embeddings skill enables embedding of both textual and visual data using the same skill type, differentiated by input (text vs image). For more information, see Azure AI Vision multimodal embeddings skill.

Create and run an indexer

Create Indexer creates an indexer on your search service. An indexer connects to the data source, loads data, runs a skillset, and indexes the enriched data.

### Create and run an indexer

POST {{searchUrl}}/indexers?api-version=2025-05-01-preview HTTP/1.1

Content-Type: application/json

api-key: {{searchApiKey}}

{

"name": "doc-extraction-multimodal-embedding-indexer",

"dataSourceName": "doc-extraction-multimodal-embedding-ds",

"targetIndexName": "doc-extraction-multimodal-embedding-index",

"skillsetName": "doc-extraction-multimodal-embedding-skillset",

"parameters": {

"maxFailedItems": -1,

"maxFailedItemsPerBatch": 0,

"batchSize": 1,

"configuration": {

"allowSkillsetToReadFileData": true

}

},

"fieldMappings": [

{

"sourceFieldName": "metadata_storage_name",

"targetFieldName": "document_title"

}

],

"outputFieldMappings": []

}

Run queries

You can start searching as soon as the first document is loaded.

### Query the index

POST {{searchUrl}}/indexes/doc-extraction-multimodal-embedding-index/docs/search?api-version=2025-05-01-preview HTTP/1.1

Content-Type: application/json

api-key: {{searchApiKey}}

{

"search": "*",

"count": true

}

Send the request. This is an unspecified full-text search query that returns all of the fields marked as retrievable in the index, along with a document count. The response should look like:

HTTP/1.1 200 OK

Transfer-Encoding: chunked

Content-Type: application/json; odata.metadata=minimal; odata.streaming=true; charset=utf-8

Content-Encoding: gzip

Vary: Accept-Encoding

Server: Microsoft-IIS/10.0

Strict-Transport-Security: max-age=2592000, max-age=15724800; includeSubDomains

Preference-Applied: odata.include-annotations="*"

OData-Version: 4.0

request-id: 712ca003-9493-40f8-a15e-cf719734a805

elapsed-time: 198

Date: Wed, 30 Apr 2025 23:20:53 GMT

Connection: close

{

"@odata.count": 100,

"@search.nextPageParameters": {

"search": "*",

"count": true,

"skip": 50

},

"value": [

],

"@odata.nextLink": "https://<YOUR-SEARCH-SERVICE-NAME>.search.windows.net/indexes/doc-extraction-multimodal-embedding-index/docs/search?api-version=2025-05-01-preview "

}

100 documents are returned in the response.

For filters, you can also use Logical operators (and, or, not) and comparison operators (eq, ne, gt, lt, ge, le). String comparisons are case-sensitive. For more information and examples, see Examples of simple search queries.

Note

The $filter parameter only works on fields that were marked filterable during index creation.

### Query for only images

POST {{searchUrl}}/indexes/doc-extraction-multimodal-embedding-index/docs/search?api-version=2025-05-01-preview HTTP/1.1

Content-Type: application/json

api-key: {{searchApiKey}}

{

"search": "*",

"count": true,

"filter": "image_document_id ne null"

}

### Query for text or images with content related to energy, returning the id, parent document, and text (only populated for text chunks), and the content path where the image is saved in the knowledge store (only populated for images)

POST {{searchUrl}}/indexes/doc-extraction-multimodal-embedding-index/docs/search?api-version=2025-05-01-preview HTTP/1.1

Content-Type: application/json

api-key: {{searchApiKey}}

{

"search": "energy",

"count": true,

"select": "content_id, document_title, content_text, content_path"

}

Reset and rerun

Indexers can be reset to clear the high-water mark, which allows a full rerun. The following POST requests are for reset, followed by rerun.

### Reset the indexer

POST {{searchUrl}}/indexers/doc-extraction-multimodal-embedding-indexer/reset?api-version=2025-05-01-preview HTTP/1.1

api-key: {{searchApiKey}}

### Run the indexer

POST {{searchUrl}}/indexers/doc-extraction-multimodal-embedding-indexer/run?api-version=2025-05-01-preview HTTP/1.1

api-key: {{searchApiKey}}

### Check indexer status

GET {{searchUrl}}/indexers/doc-extraction-multimodal-embedding-indexer/status?api-version=2025-05-01-preview HTTP/1.1

api-key: {{searchApiKey}}

Clean up resources

When you're working in your own subscription, at the end of a project, it's a good idea to remove the resources that you no longer need. Resources left running can cost you money. You can delete resources individually or delete the resource group to delete the entire set of resources.

You can use the Azure portal to delete indexes, indexers, and data sources.

See also

Now that you're familiar with a sample implementation of a multimodal indexing scenario, check out: