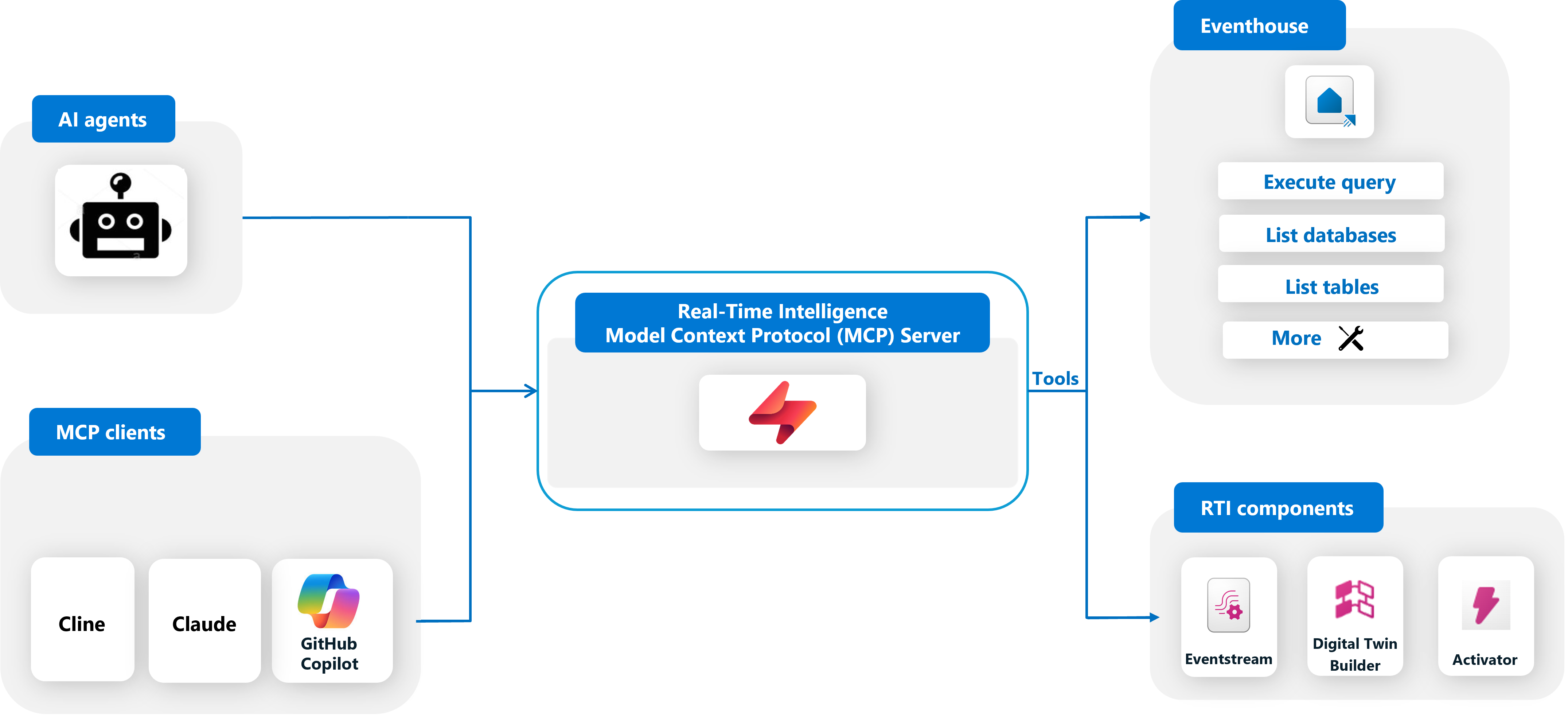

Integrating Model Context Protocol (MCP) with Real-Time Intelligence (RTI) lets you get AI-driven insights and actions in real time. The MCP server lets AI agents or AI applications interact with Fabric RTI or Azure Data Explorer (ADX) by providing tools through the MCP interface, so you can query and analyze data easily.

The RTI MCP Server is a fully open-source MCP server implementation for Microsoft Fabric Real-Time Intelligence (RTI) and Azure Data Explorer (ADX).

Important

This feature is in preview.

Introduction to the Model Context Protocol (MCP)

Model Context Protocol (MCP) is a protocol that lets AI models, like Azure OpenAI models, interact with external tools and resources. MCP makes it easier for agents to find, connect to, and use enterprise data.

Scenarios

The most common scenario for using the RTI MCP Server is to connect to it from an existing AI client, such as Cline, Claude, or GitHub Copilot. The client can then use all the available tools to access and interact with RTI or ADX resources using natural language. For example, you could use GitHub Copilot agent mode with the RTI MCP Server to list KQL databases or ADX clusters, or to run natural language queries on RTI Eventhouses.
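As a rough illustration of what connecting a client involves, many MCP clients read a JSON entry that tells them how to launch a server. The sketch below builds such an entry in Python and prints it. The top-level `servers` key, the `uvx` launcher, the `microsoft-fabric-rti-mcp` package name, and the `KUSTO_SERVICE_URI` variable are all assumptions made for illustration; check the RTI MCP Server README and your client's documentation for the exact names.

```python
import json

# Assumption: launcher command, package name, and environment variable name
# are illustrative and must be confirmed against the server's README.
server_entry = {
    "command": "uvx",
    "args": ["microsoft-fabric-rti-mcp"],
    "env": {
        # Placeholder URI; replace with your Eventhouse or ADX cluster URI.
        "KUSTO_SERVICE_URI": "https://<your-cluster>.kusto.windows.net",
    },
}

# Assumption: the client groups server entries under a "servers" key.
# Other clients use a different key, so adjust to match your client.
client_config = {"servers": {"fabric-rti-mcp": server_entry}}

print(json.dumps(client_config, indent=2))
```

The printed JSON would then be saved wherever your client expects its MCP configuration.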

Architecture

The RTI MCP Server is at the core of the system and acts as a bridge between AI agents and data sources. Agents send requests to the MCP server, which translates them into Eventhouse queries.

This architecture lets you build modular, scalable, and secure intelligent applications that respond to real-time signals. MCP uses a client-server architecture, so AI applications can interact with external tools efficiently. The architecture includes the following components:

- MCP Host: The environment where the AI model (like GPT-4, Claude, or Gemini) runs.

- MCP Client: An intermediary service, like GitHub Copilot, Cline, or Claude Desktop, that forwards the AI model's requests to MCP servers.

- MCP Server: A lightweight application that exposes specific capabilities, such as access to APIs or databases, as tools that can be invoked through natural language. For example, the Fabric RTI MCP server can execute KQL queries for real-time data retrieval from KQL databases.
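The request flow between these components can be sketched in a few lines. MCP messages are JSON-RPC 2.0, and a client invokes a server tool with the `tools/call` method. The example below is a toy, in-process stand-in for a server, not the RTI MCP Server's actual code: the tool name `run_kql`, its arguments, and the stub handler are hypothetical.

```python
import json

def run_kql(arguments):
    # Hypothetical tool handler. A real server would send the query to an
    # Eventhouse or ADX cluster; this stub only echoes what it received.
    return f"would run on {arguments['database']}: {arguments['query']}"

# Hypothetical tool registry; the real server's tool names may differ.
TOOLS = {"run_kql": run_kql}

def make_tool_call(request_id, name, arguments):
    """Build the JSON-RPC 2.0 request an MCP client sends to invoke a tool."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }

def handle_request(request):
    """Toy server-side dispatch: find the tool, run it, wrap the output."""
    if request.get("method") != "tools/call":
        return {"jsonrpc": "2.0", "id": request.get("id"),
                "error": {"code": -32601, "message": "Method not found"}}
    params = request["params"]
    handler = TOOLS.get(params["name"])
    if handler is None:
        return {"jsonrpc": "2.0", "id": request["id"],
                "error": {"code": -32602,
                          "message": f"Unknown tool: {params['name']}"}}
    text = handler(params["arguments"])
    return {"jsonrpc": "2.0", "id": request["id"],
            "result": {"content": [{"type": "text", "text": text}],
                       "isError": False}}

request = make_tool_call(
    1, "run_kql", {"database": "MyDatabase", "query": "MyTable | take 5"}
)
response = handle_request(request)
print(json.dumps(response, indent=2))
```

In a real deployment the client and server are separate processes and the messages travel over a transport such as standard input/output, but the request and response shapes stay the same.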

Key features

Real-Time Data Access: Retrieve data from KQL databases in seconds.

Natural Language Interfaces: Ask questions in plain English or other languages, and the system turns them into optimized queries (NL2KQL).

Schema Discovery: Discover schema and metadata, so you can learn data structures dynamically.

Plug-and-Play Integration: Connect MCP clients like GitHub Copilot, Claude, and Cline to RTI with minimal setup because of standardized APIs and discovery mechanisms.

Local Language Inference: Work with your data in your preferred language.
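The discovery mechanism mentioned under Plug-and-Play Integration can be sketched as follows. An MCP client asks a server which tools it offers with the JSON-RPC `tools/list` method, and each advertised tool carries a JSON Schema describing its arguments. The reply shown here is made up for illustration: the tool names, descriptions, and argument names are hypothetical and may not match what the RTI MCP Server actually advertises.

```python
def list_tools_request(request_id):
    """Build the JSON-RPC 2.0 request an MCP client sends to discover tools."""
    return {"jsonrpc": "2.0", "id": request_id, "method": "tools/list"}

# Hypothetical server reply; real tool names and schemas may differ.
EXAMPLE_RESPONSE = {
    "jsonrpc": "2.0",
    "id": 1,
    "result": {
        "tools": [
            {
                "name": "list_databases",
                "description": "List the KQL databases in a cluster.",
                "inputSchema": {
                    "type": "object",
                    "properties": {"cluster_uri": {"type": "string"}},
                    "required": ["cluster_uri"],
                },
            },
            {
                "name": "run_kql",
                "description": "Run a KQL query against a database.",
                "inputSchema": {
                    "type": "object",
                    "properties": {
                        "cluster_uri": {"type": "string"},
                        "database": {"type": "string"},
                        "query": {"type": "string"},
                    },
                    "required": ["cluster_uri", "database", "query"],
                },
            },
        ]
    },
}

def required_arguments(response, tool_name):
    """Return the argument names a tool declares as required."""
    for tool in response["result"]["tools"]:
        if tool["name"] == tool_name:
            return tool["inputSchema"].get("required", [])
    raise KeyError(f"tool not advertised: {tool_name}")

tool_names = [tool["name"] for tool in EXAMPLE_RESPONSE["result"]["tools"]]
print(tool_names)
print(required_arguments(EXAMPLE_RESPONSE, "run_kql"))
```

Because the client learns tool names and argument schemas at runtime, it does not need server-specific code to start calling tools.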

Supported RTI components

Eventhouse - Run KQL queries against the KQL databases in your Eventhouse backend. This unified interface lets AI agents query, reason, and act on real-time data.

Note

You can also use the Fabric RTI MCP Server to run KQL queries against the clusters in your Azure Data Explorer backend.