How custom engine agents work

Here, we discuss how a custom engine agent works behind the scenes. You learn about the components of a custom engine agent and how they fit together to create agents in Microsoft 365. This knowledge helps you decide whether a custom engine agent is the right fit for your scenario.

Microsoft Teams bot

Custom engine agents are built as bots. Bots use the Microsoft Bot Framework, a cloud service whose communication layer routes messages between your bot logic and the channels the bot is connected to.

A bot can be connected to many channels, such as Microsoft Teams, Slack, Facebook, and web chat.
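The routing idea can be sketched in a few lines of TypeScript. This is a self-contained illustration, not the Bot Framework SDK: the `Activity` interface borrows three field names from the Bot Framework activity schema (`type`, `channelId`, `text`), while `Bot`, `echoBot`, and `route` are hypothetical names standing in for the work the Bot Framework does for you. What it shows is that the bot logic handles a single message shape, whichever channel the message arrived on.

```typescript
// A few field names borrowed from the Bot Framework activity schema;
// real activities carry many more (from, recipient, conversation, ...).
interface Activity {
  type: "message" | "conversationUpdate";
  channelId: string; // for example "msteams", "slack", or "webchat"
  text?: string;
}

// Your bot logic: one handler, whatever the channel.
type Bot = (incoming: Activity) => Activity | undefined;

const echoBot: Bot = (incoming) => {
  if (incoming.type !== "message" || !incoming.text) {
    return undefined; // nothing to reply to
  }
  return { type: "message", channelId: incoming.channelId, text: `You said: ${incoming.text}` };
};

// Hypothetical stand-in for the Bot Framework communication layer:
// hands the activity to the bot and delivers any reply to the channel
// the message came from.
function route(bot: Bot, incoming: Activity, deliver: (reply: Activity) => void): void {
  const reply = bot(incoming);
  if (reply) {
    deliver(reply);
  }
}

const delivered: Activity[] = [];
const deliver = (reply: Activity): void => {
  delivered.push(reply);
};
route(echoBot, { type: "message", channelId: "msteams", text: "hi" }, deliver);
route(echoBot, { type: "message", channelId: "webchat", text: "hello" }, deliver);
route(echoBot, { type: "conversationUpdate", channelId: "slack" }, deliver);
```

The same `echoBot` serves Teams and web chat unchanged; only the `channelId` on the reply differs.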

Custom engine agents respond to users in natural language and can format their responses as plain text, Markdown, or interactive cards known as Adaptive Cards.
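As a rough sketch of the three response formats, the following builds reply messages as plain objects. The `textFormat` values and the Adaptive Card attachment content type are assumed to follow the Bot Framework activity schema and the Adaptive Cards schema; the helper functions, card text, and URL are hypothetical placeholders. Check which Adaptive Cards version your target Teams clients support before relying on the `version` value shown.

```typescript
// Minimal slices of the Bot Framework activity schema.
interface Attachment {
  contentType: string;
  content: unknown;
}

interface MessageActivity {
  type: "message";
  text?: string;
  textFormat?: "plain" | "markdown" | "xml";
  attachments?: Attachment[];
}

function plainTextReply(text: string): MessageActivity {
  return { type: "message", text, textFormat: "plain" };
}

function markdownReply(markdown: string): MessageActivity {
  return { type: "message", text: markdown, textFormat: "markdown" };
}

// Adaptive Cards are sent as attachments with this content type.
const ADAPTIVE_CARD = "application/vnd.microsoft.card.adaptive";

function adaptiveCardReply(title: string, detail: string, url: string): MessageActivity {
  const card = {
    $schema: "http://adaptivecards.io/schemas/adaptive-card.json",
    type: "AdaptiveCard",
    version: "1.5", // check which version your target Teams clients support
    body: [
      { type: "TextBlock", text: title, weight: "Bolder", size: "Medium", wrap: true },
      { type: "TextBlock", text: detail, wrap: true },
    ],
    actions: [{ type: "Action.OpenUrl", title: "View details", url }],
  };
  return { type: "message", attachments: [{ contentType: ADAPTIVE_CARD, content: card }] };
}

// Placeholder content and URL.
const replies: MessageActivity[] = [
  plainTextReply("Your expense report was approved."),
  markdownReply("Your expense report was **approved**."),
  adaptiveCardReply("Expense approved", "Report #42 was approved.", "https://contoso.example/expenses/42"),
];
```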

Where can a custom engine agent be used in Microsoft Teams?

Users can interact with custom engine agents across different chat experiences in Microsoft Teams. They can use agents in:

- One-to-one chats: a single-threaded conversation, just like chatting with another user.

- Group chats, Teams channels, and meetings: users invoke the agent by @mentioning it in the chat thread.

By using the chat experiences provided by Microsoft Teams, users don't have to leave the context of their work to use your agent.
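In group chats, channels, and meetings, the text a bot receives typically still contains the mention markup, for example `<at>Contoso Agent</at> summarize this thread`, along with a `mention` entity. A common first step is to remove the bot's own mention before passing the text to the model. The sketch below does this by hand with simplified, hypothetical types (`IncomingMessage`, `MentionEntity`) and a made-up bot ID; the Bot Framework SDK also provides helpers for this.

```typescript
// Simplified model of the mention entity attached to a Teams message.
interface MentionEntity {
  type: "mention";
  mentioned: { id: string; name: string };
  text: string; // the markup as it appears in the message text
}

interface IncomingMessage {
  text: string;
  entities: MentionEntity[];
}

// True if the message @mentions the bot with the given id.
function mentionsBot(message: IncomingMessage, botId: string): boolean {
  return message.entities.some((e) => e.type === "mention" && e.mentioned.id === botId);
}

// Removes the bot's own mention markup so the remaining text can be
// treated as the user's actual request.
function stripBotMention(message: IncomingMessage, botId: string): string {
  let text = message.text;
  for (const e of message.entities) {
    if (e.type === "mention" && e.mentioned.id === botId) {
      text = text.split(e.text).join("");
    }
  }
  return text.trim();
}

// Hypothetical example; "bot-123" is a made-up bot ID.
const groupMessage: IncomingMessage = {
  text: "<at>Contoso Agent</at> summarize this thread",
  entities: [
    { type: "mention", mentioned: { id: "bot-123", name: "Contoso Agent" }, text: "<at>Contoso Agent</at>" },
  ],
};
```

Checking `mentionsBot` first lets the agent ignore group messages that aren't addressed to it.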

What are proactive messages?

Custom engine agents support proactive messages. A proactive message is any message a bot sends that isn't a response to a user's request. Examples include:

- Welcome messages

- Notifications

- Scheduled messages
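Proactive messaging depends on the bot remembering where to send a message. A minimal sketch, assuming a simplified `ConversationReference` (the real Bot Framework type nests these values and carries more data) and a hypothetical `ProactiveNotifier` class: the bot saves a reference whenever the user talks to it, then reuses it later to send a message nobody asked for. In the Bot Framework SDK, the equivalent steps are capturing a conversation reference from an incoming activity and later continuing that conversation through the adapter. The `serviceUrl` below is a placeholder.

```typescript
// Simplified conversation reference: what the bot needs to address a
// conversation later. The real Bot Framework type nests these values
// (conversation.id, user.id) and carries more data.
interface ConversationReference {
  channelId: string;
  serviceUrl: string;
  conversationId: string;
  userId: string;
}

// Hypothetical transport that actually delivers the message.
type SendFn = (ref: ConversationReference, text: string) => void;

class ProactiveNotifier {
  private readonly refs = new Map<string, ConversationReference>();
  private readonly send: SendFn;

  constructor(send: SendFn) {
    this.send = send;
  }

  // Call whenever the user messages the bot, so there is a reference to reuse.
  remember(ref: ConversationReference): void {
    this.refs.set(ref.userId, ref);
  }

  // Sends a message the user didn't ask for, such as a notification.
  // Returns false if the bot has no reference for this user yet.
  notify(userId: string, text: string): boolean {
    const ref = this.refs.get(userId);
    if (!ref) {
      return false;
    }
    this.send(ref, text);
    return true;
  }
}

const outbox: string[] = [];
const notifier = new ProactiveNotifier((ref, text) => {
  outbox.push(`${ref.conversationId}: ${text}`);
});
notifier.remember({
  channelId: "msteams",
  serviceUrl: "https://service.example/", // placeholder
  conversationId: "conv-1",
  userId: "user-1",
});
notifier.notify("user-1", "Your weekly report is ready.");
```

A scheduler or event handler would call `notify`; this is why a bot usually can't message a user it has never interacted with.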

AI model and orchestrator

A custom engine agent uses the model and orchestrator that you provide. It doesn't use the AI infrastructure provided by Microsoft 365 Copilot. You choose the model, orchestrator, and Responsible AI (RAI) controls suitable for the scenario and tasks you want to optimize for.

AI model

AI models are fundamental to natural language processing (NLP) tasks, enabling machines to understand, generate, and manipulate human language.

AI models come in two main types:

- Foundation models, such as GPT-4, are pretrained on extensive datasets and can perform a wide range of tasks out of the box. They're versatile and can be fine-tuned for specific applications, making them a cost-effective choice for many developers.

- Custom models are tailored for specific tasks like sentiment analysis or medical diagnosis, offering potentially higher accuracy for specialized applications.

You can host these models on cloud platforms such as Azure AI Foundry or OpenAI, which offer robust infrastructure and scalability.
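Because you bring your own model, it helps to keep the rest of the agent independent of where each model is hosted. A minimal sketch, assuming a hypothetical `ModelClient` interface and two stub clients: `generalModel` stands in for a foundation model and `sentimentModel` for a custom model (here just a keyword check, not a real classifier). A real client would call the hosting platform's API inside `complete`.

```typescript
// Message shape shared by most chat-style model APIs.
interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

// Hypothetical interface: agent code depends on this, not on a specific
// hosting platform, so a model can be swapped without touching callers.
interface ModelClient {
  readonly name: string;
  complete(messages: ChatMessage[]): Promise<string>;
}

// Stub client. A real client would call its hosting platform's API here.
const generalModel: ModelClient = {
  name: "foundation-model",
  complete: async (messages) => `General answer to: ${messages[messages.length - 1].content}`,
};

// Stands in for a custom sentiment model; this keyword check is not a real classifier.
const sentimentModel: ModelClient = {
  name: "custom-sentiment-model",
  complete: async (messages) =>
    /broken|refund|angry/i.test(messages[messages.length - 1].content) ? "negative" : "neutral",
};

type Task = "chat" | "sentiment";

// Route each task to the model that suits it.
const modelForTask: Record<Task, ModelClient> = {
  chat: generalModel,
  sentiment: sentimentModel,
};

function run(task: Task, userText: string): Promise<string> {
  return modelForTask[task].complete([{ role: "user", content: userText }]);
}
```

Replacing a stub with a client for a different host only changes the `modelForTask` table.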

Orchestrator

An agent typically uses an orchestrator to connect your code to AI models and to manage the interactions between components so that they work together coherently.

Key features of an orchestrator typically include:

- Prompting to guide the AI model's responses, ensuring contextual relevance.

- Responsible AI (RAI) to ensure that responses aren't harmful.

- Workflow management to determine task sequences and to invoke the right models or services.

- Integration to connect various AI capabilities and data sources.

- Resource management to optimize computational power.

- Error handling to maintain stability.

- Monitoring and logging to provide performance insights.

These features control and optimize the agent's behavior, ensuring effective operation and accurate, context-aware responses.
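The hypothetical `Orchestrator` class below sketches how several of these features can wrap a single model call: a system prompt (prompting), a keyword screen on input and output (a deliberately simplified stand-in for real Responsible AI controls such as content-safety services), a cap on conversation history (resource management), retries (error handling), and a step log (monitoring). The model is a stub that fails once to exercise the retry path; the class name, parameters, and default values are assumptions for illustration.

```typescript
interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

// Any async function that sends messages to a model and returns its reply.
type Model = (messages: ChatMessage[]) => Promise<string>;

interface LogEntry {
  step: string;
  detail: string;
}

// Hypothetical orchestrator wrapping one model call with several features.
class Orchestrator {
  readonly log: LogEntry[] = [];
  private readonly model: Model;
  private readonly systemPrompt: string;
  private readonly blockedTerms: string[];
  private readonly maxHistory: number;
  private readonly maxAttempts: number;

  constructor(model: Model, systemPrompt: string, blockedTerms: string[], maxHistory = 6, maxAttempts = 3) {
    this.model = model;
    this.systemPrompt = systemPrompt;
    this.blockedTerms = blockedTerms.map((t) => t.toLowerCase());
    this.maxHistory = maxHistory;
    this.maxAttempts = maxAttempts;
  }

  async handle(history: ChatMessage[], userText: string): Promise<string> {
    // Responsible AI (simplified stand-in): screen the user's input.
    if (this.isBlocked(userText)) {
      this.log.push({ step: "rai", detail: "input blocked" });
      return "Sorry, I can't help with that request.";
    }

    // Prompting and resource management: system prompt plus only the
    // most recent turns, to bound prompt size.
    const recent = this.maxHistory > 0 ? history.slice(-this.maxHistory) : [];
    const messages: ChatMessage[] = [
      { role: "system", content: this.systemPrompt },
      ...recent,
      { role: "user", content: userText },
    ];

    // Error handling: retry failed model calls up to maxAttempts times.
    for (let attempt = 1; attempt <= this.maxAttempts; attempt++) {
      try {
        const reply = await this.model(messages);
        // Monitoring: record how many attempts the call took.
        this.log.push({ step: "model", detail: `succeeded on attempt ${attempt}` });
        // Responsible AI again: screen the model's output.
        if (this.isBlocked(reply)) {
          this.log.push({ step: "rai", detail: "output blocked" });
          return "Sorry, I can't share that response.";
        }
        return reply;
      } catch (err) {
        this.log.push({ step: "model", detail: `attempt ${attempt} failed: ${String(err)}` });
      }
    }
    return "The service is busy right now. Please try again later.";
  }

  private isBlocked(text: string): boolean {
    const lower = text.toLowerCase();
    return this.blockedTerms.some((term) => lower.indexOf(term) !== -1);
  }
}

// Stub model that fails once, then answers, to exercise the retry path.
let calls = 0;
const flakyModel: Model = async (messages) => {
  calls++;
  if (calls === 1) {
    throw new Error("model endpoint unavailable");
  }
  return `Answer based on ${messages.length} messages`;
};

const orchestrator = new Orchestrator(flakyModel, "You are a helpful expenses assistant.", ["password"]);
```

Retries here happen immediately; a production orchestrator would usually add a backoff delay and retry only errors known to be transient.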

Custom engine agent user experience

Developers can enhance the user experience in Microsoft Teams by implementing extra platform features in a custom engine agent.

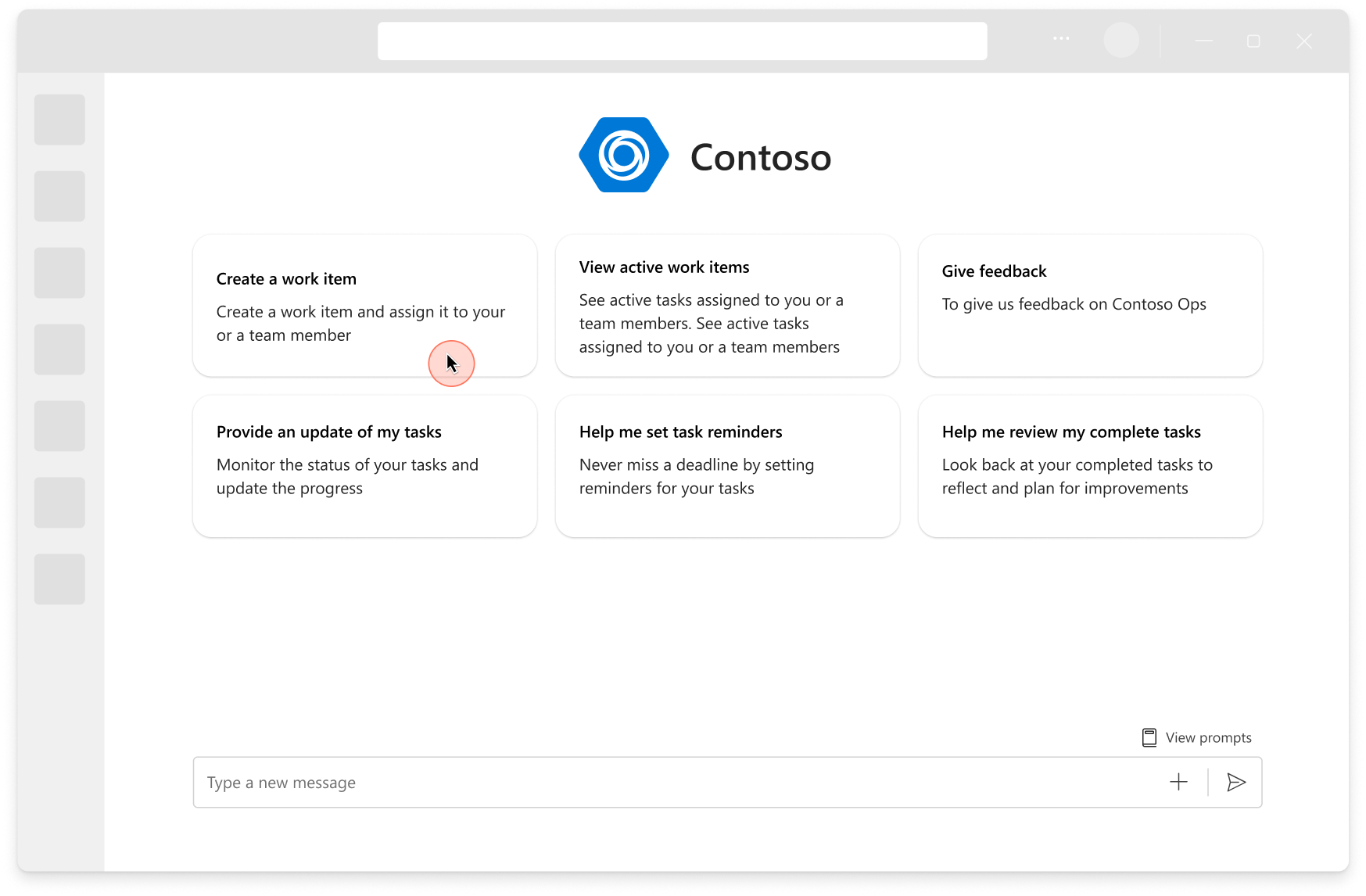

Prompt starters

Prompt starters, also known as conversation starters, provide predefined prompts in your agent to help users initiate interactions.

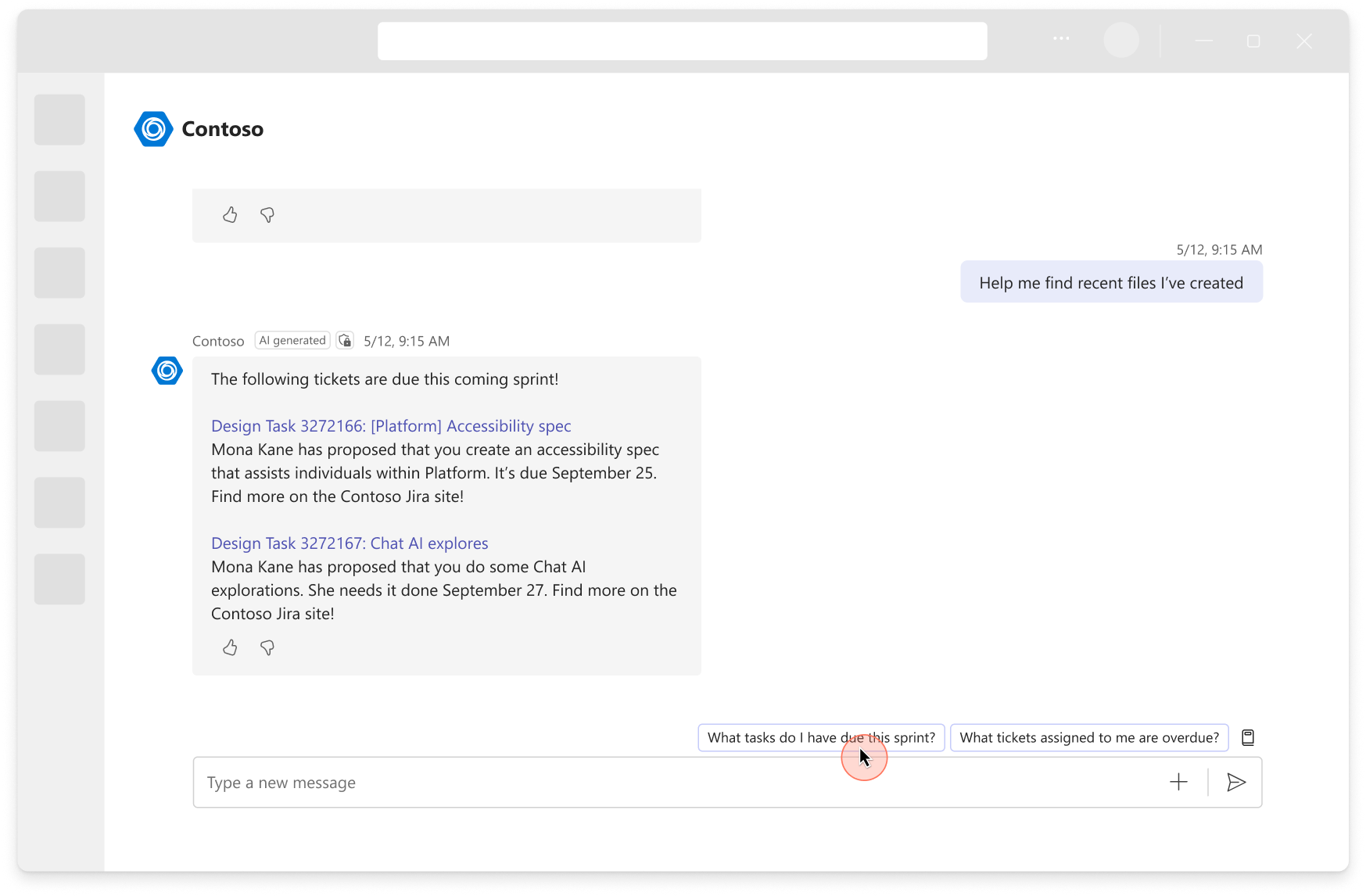
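Prompt starters are declared in the Teams app manifest rather than in bot code. The sketch below builds a manifest `bots` entry as a TypeScript object and prints it as JSON. It assumes prompt starters live in the entry's `commandLists`, with a `title` and `description` per command, as described in the Teams app manifest schema; check the schema version you target. The `botId` GUID and the prompt text are placeholders.

```typescript
interface PromptStarter {
  title: string;
  description: string;
}

interface CommandList {
  scopes: Array<"personal" | "team" | "groupChat">;
  commands: PromptStarter[];
}

// Prompt starters for the personal (one-to-one) scope; placeholder text.
const commandLists: CommandList[] = [
  {
    scopes: ["personal"],
    commands: [
      { title: "Summarize my week", description: "Summarize the expense reports I filed this week" },
      { title: "Check a policy", description: "What is the travel reimbursement policy?" },
    ],
  },
];

// Fragment of a Teams app manifest; the botId GUID is a placeholder.
const manifestFragment = {
  bots: [
    {
      botId: "00000000-0000-0000-0000-000000000000",
      scopes: ["personal", "team", "groupChat"],
      commandLists,
    },
  ],
};

console.log(JSON.stringify(manifestFragment, null, 2));
```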

Suggested prompts

Suggested prompts guide users to the next best actions based on the conversation context, user profiles, and organizational preferences.

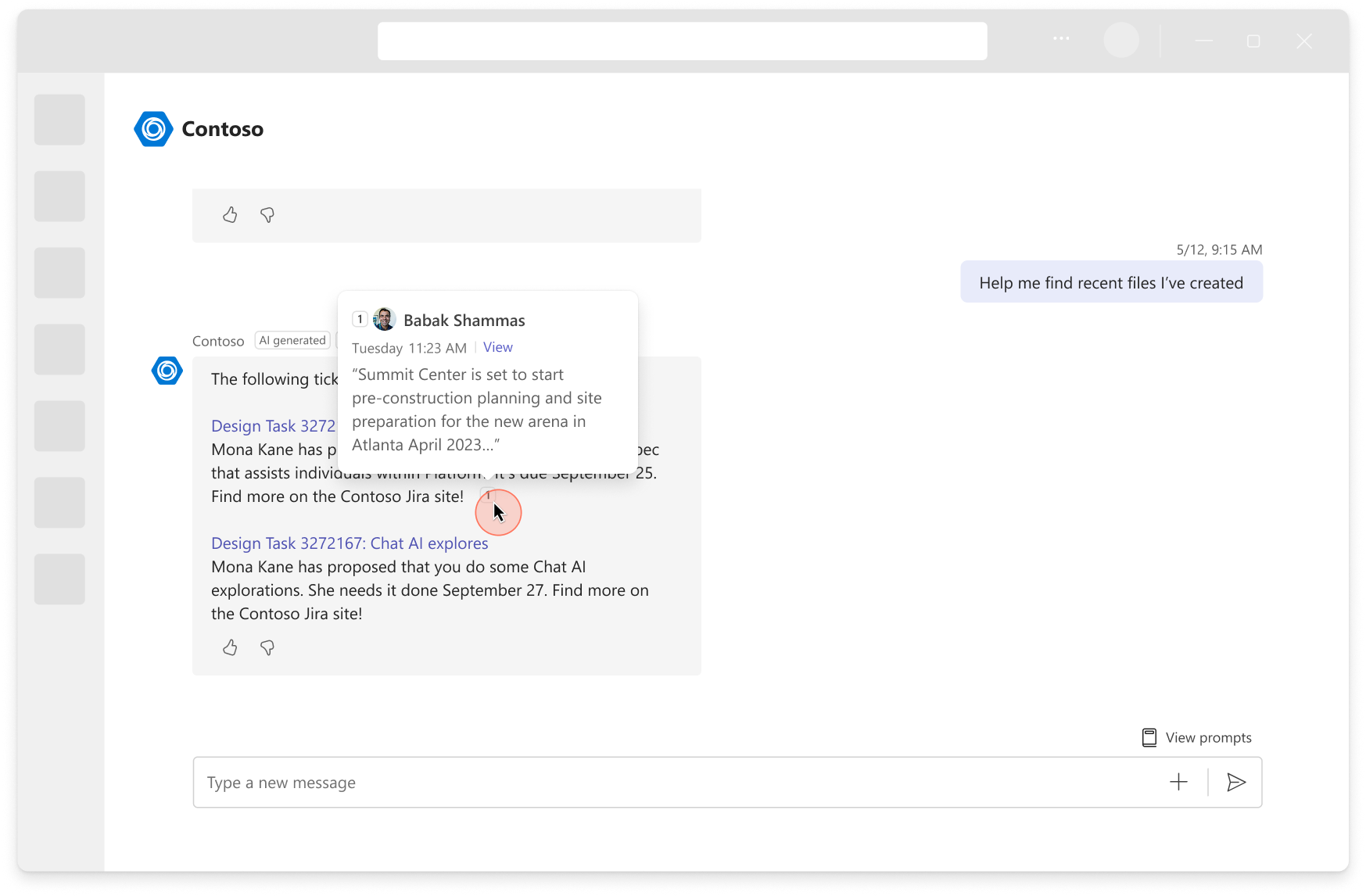
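One way to offer suggested prompts in a bot is the Bot Framework `suggestedActions` property, where each `imBack` action sends its value back to the bot as if the user had typed it. The sketch below assumes that shape. The topic-based `followUpsFor` function is a hypothetical, rule-based placeholder; a real agent might instead ask its model to propose follow-ups from the conversation context.

```typescript
interface CardAction {
  type: "imBack";
  title: string;
  value: string;
}

interface MessageActivity {
  type: "message";
  text: string;
  suggestedActions?: { actions: CardAction[] };
}

// Hypothetical, rule-based choice of follow-up prompts.
function followUpsFor(topic: string): string[] {
  switch (topic) {
    case "expenses":
      return ["Show my pending reports", "How do I add a receipt?"];
    case "travel":
      return ["What is the per diem?", "Book a hotel"];
    default:
      return ["What can you do?"];
  }
}

function replyWithSuggestions(text: string, topic: string): MessageActivity {
  return {
    type: "message",
    text,
    suggestedActions: {
      // imBack posts the value back to the bot as the user's next message.
      actions: followUpsFor(topic).map((p): CardAction => ({ type: "imBack", title: p, value: p })),
    },
  };
}

const suggestionReply = replyWithSuggestions("Your report was submitted.", "expenses");
```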

Citations

Citations are references to the sources of information used by the agent to generate its responses. These citations help to ensure transparency, credibility, and trustworthiness in agent interactions.

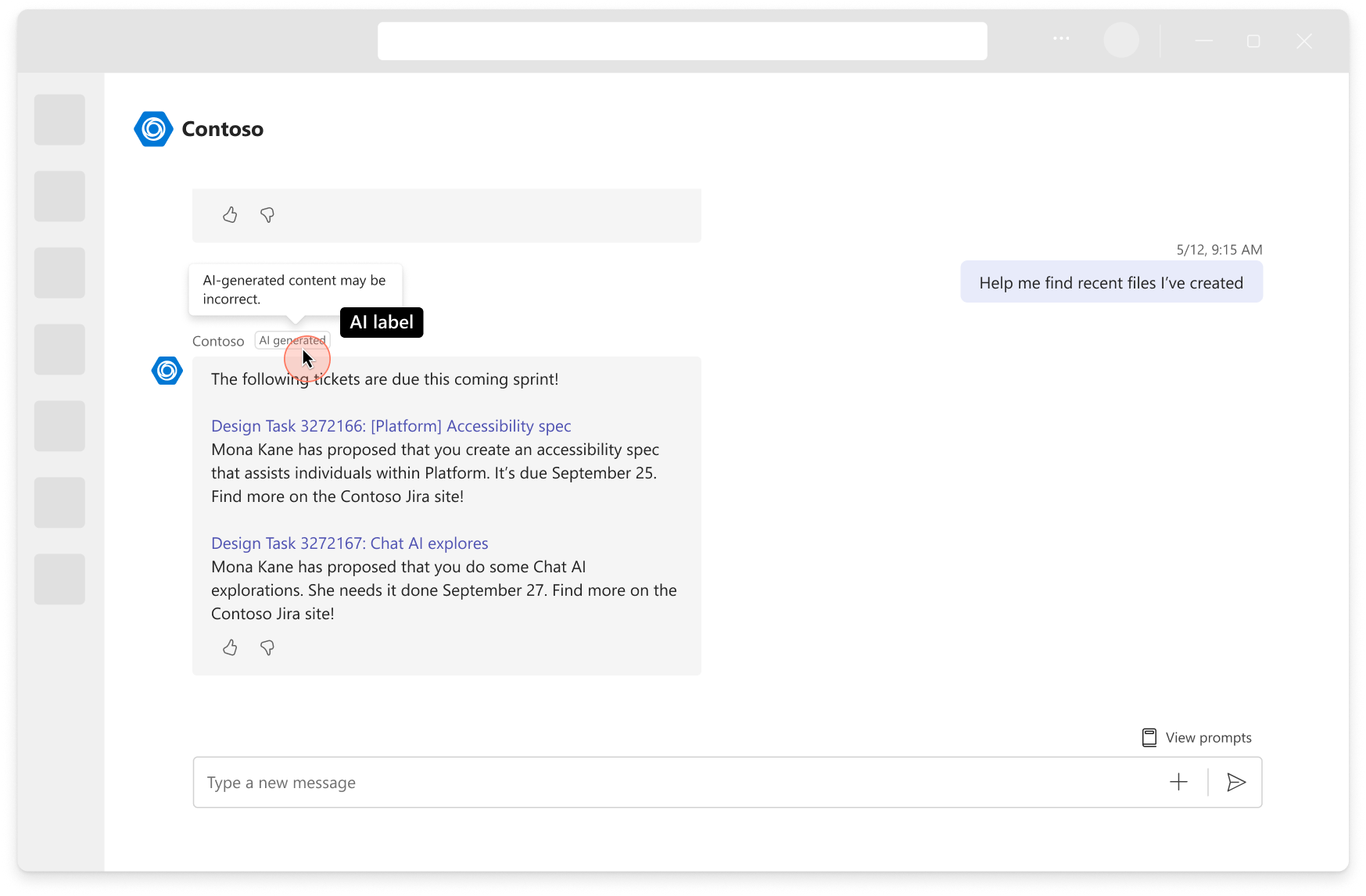

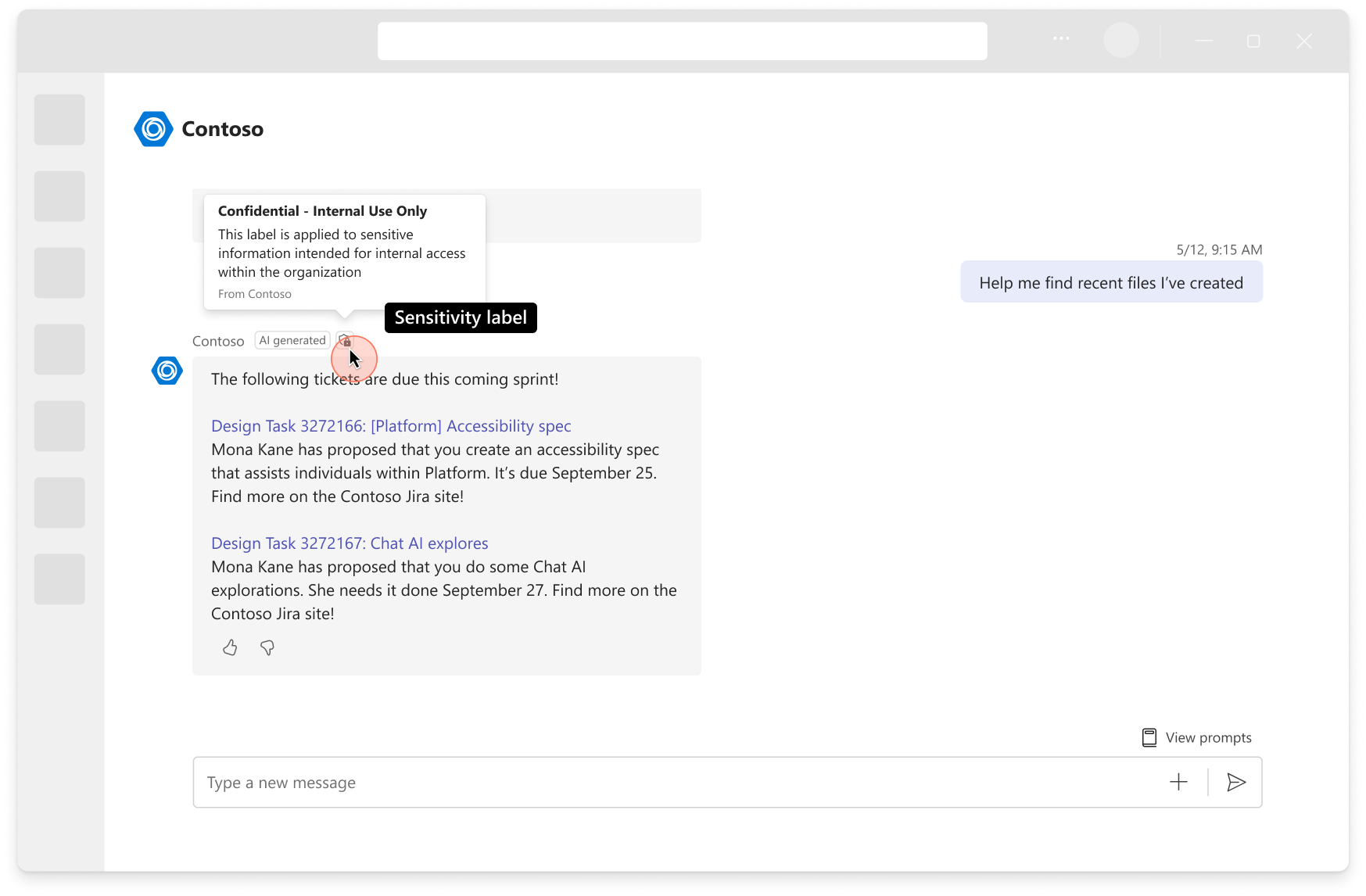
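A common pattern is for the model to cite sources with numbered markers such as `[1]`, and for the agent to turn each marker into a citation that points at the retrieved source. The sketch below extracts the markers and builds the citation list, rejecting markers that don't match any retrieved source. The entity fields (`Claim`, `DigitalDocument`, `position`, `appearance`) are modeled on the citation format described in the Teams documentation for AI-generated messages and should be verified there; `buildCitations` is a hypothetical helper, and the sources and answer text are made-up sample data.

```typescript
interface Source {
  title: string;
  url: string;
  snippet: string;
}

// Citation shape modeled on the Teams docs; verify field names there.
interface Claim {
  "@type": "Claim";
  position: number;
  appearance: { "@type": "DigitalDocument"; name: string; url: string; abstract: string };
}

// Returns the distinct [n] markers in the answer, in ascending order.
function markersIn(answer: string): number[] {
  const found = new Set<number>();
  const pattern = /\[(\d+)\]/g;
  let match: RegExpExecArray | null;
  while ((match = pattern.exec(answer)) !== null) {
    found.add(Number(match[1]));
  }
  return Array.from(found).sort((a, b) => a - b);
}

// Builds one citation per marker. Throws if the answer cites a source
// that wasn't retrieved, which may mean the model invented it.
function buildCitations(answer: string, sources: Source[]): Claim[] {
  return markersIn(answer).map((n): Claim => {
    const source = sources[n - 1];
    if (!source) {
      throw new Error(`Answer cites [${n}] but only ${sources.length} sources were retrieved`);
    }
    return {
      "@type": "Claim",
      position: n,
      appearance: { "@type": "DigitalDocument", name: source.title, url: source.url, abstract: source.snippet },
    };
  });
}

// Made-up sample data.
const sources: Source[] = [
  { title: "Travel policy", url: "https://contoso.example/travel", snippet: "Book economy for flights under six hours." },
  { title: "Expense policy", url: "https://contoso.example/expenses", snippet: "Submit receipts within 30 days." },
];
const answer = "Book economy for short flights [1]. Receipts are due within 30 days [2].";
const citationEntity = {
  type: "https://schema.org/Message",
  "@type": "Message",
  "@context": "https://schema.org",
  "@id": "",
  citation: buildCitations(answer, sources),
};
```

Rejecting unmatched markers keeps the agent from showing a citation that points nowhere.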

AI and sensitivity labels

AI labels indicate that a response is generated by AI. This transparency helps users distinguish between AI-generated and human-generated content and fosters trust in agent responses.

Sensitivity labels mark responses or content that contain confidential or sensitive information. They support proper data handling and help ensure that sensitive information is protected. Labels can be customized to reflect different levels of confidentiality, such as Confidential or Highly Confidential, and are important for compliance and security.

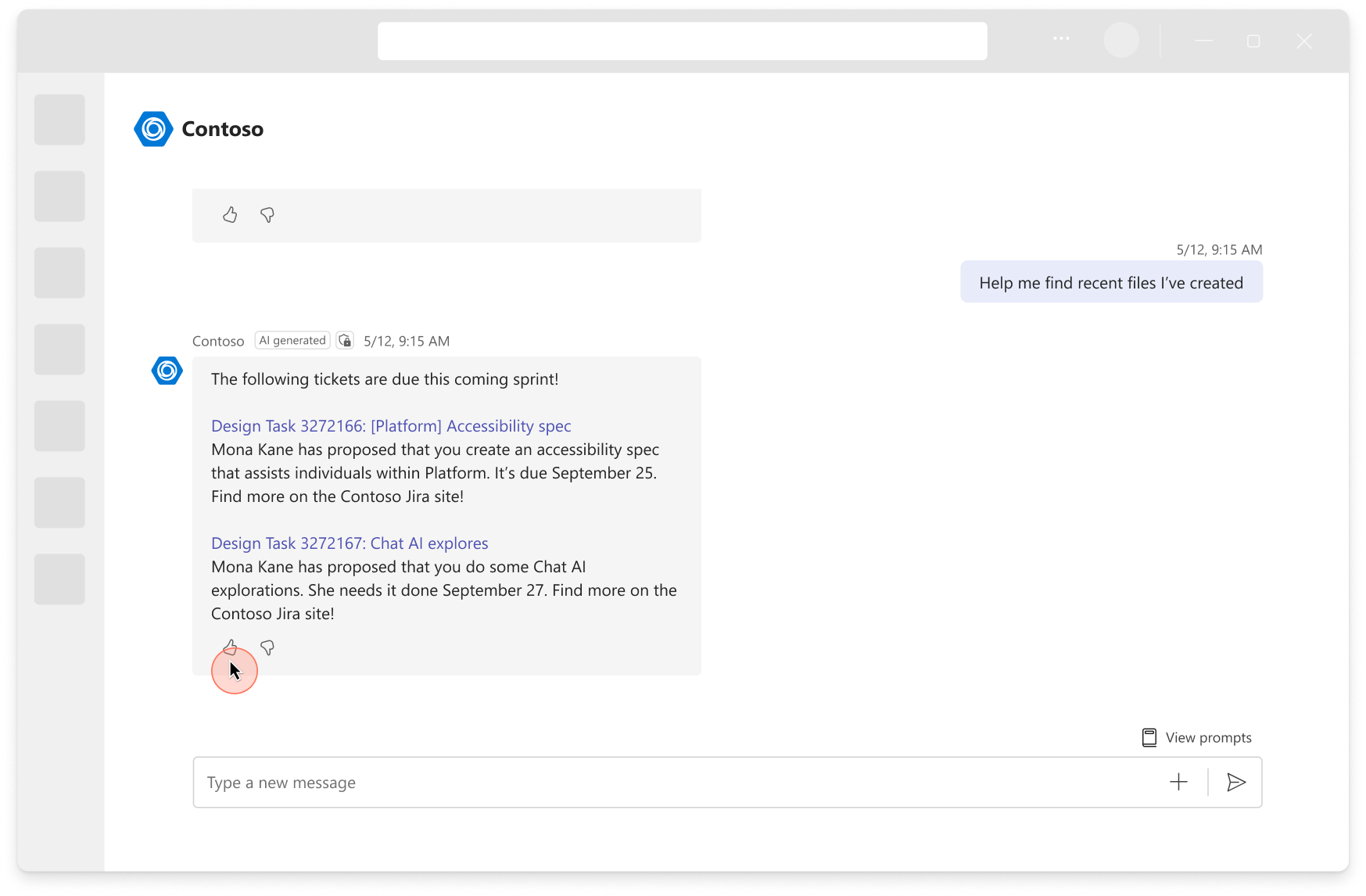
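Both labels can be attached to a reply as message metadata. The sketch below assumes the entity shape described in the Teams documentation for AI-generated messages: `additionalType: ["AIGeneratedContent"]` for the AI label and a `usageInfo` object for the sensitivity label. Treat these field names as assumptions to verify against that documentation; `labeledReply` and `LabelOptions` are hypothetical helpers, and label names come from your organization's own labeling scheme.

```typescript
// Message entity fields assumed from the Teams docs on AI-generated
// messages; verify the names before relying on them.
interface MessageEntity {
  type: "https://schema.org/Message";
  "@type": "Message";
  "@context": "https://schema.org";
  "@id": string;
  additionalType?: string[];
  usageInfo?: { "@type": "CreativeWork"; name: string; description: string };
}

interface LabeledMessage {
  type: "message";
  text: string;
  entities: MessageEntity[];
}

// Hypothetical options for the labeledReply helper.
interface LabelOptions {
  aiGenerated: boolean;
  sensitivityLabel?: string; // e.g. "Confidential", as defined by your organization
  sensitivityDescription?: string;
}

function labeledReply(text: string, options: LabelOptions): LabeledMessage {
  const entity: MessageEntity = {
    type: "https://schema.org/Message",
    "@type": "Message",
    "@context": "https://schema.org",
    "@id": "",
  };
  if (options.aiGenerated) {
    // Intended to mark the message as AI-generated.
    entity.additionalType = ["AIGeneratedContent"];
  }
  if (options.sensitivityLabel) {
    // Intended to show the sensitivity label on the message.
    entity.usageInfo = {
      "@type": "CreativeWork",
      name: options.sensitivityLabel,
      description: options.sensitivityDescription ?? "",
    };
  }
  return { type: "message", text, entities: [entity] };
}

const plain = labeledReply("Here is a summary of the thread.", { aiGenerated: true });
const sensitive = labeledReply("Q3 revenue is tracking above plan.", {
  aiGenerated: true,
  sensitivityLabel: "Confidential",
  sensitivityDescription: "Internal use only.",
});
```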

Feedback loop

Feedback loops are mechanisms that let users give developers feedback on an agent's responses. Developers use this feedback to refine the agent over time so that it becomes more accurate and useful.
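A feedback loop has two halves: the agent marks its replies as rateable, and it records ratings when users submit them. The sketch assumes the Teams mechanism described in its documentation, namely `channelData.feedbackLoopEnabled` on outgoing messages and an `invoke` activity named `message/submitAction` carrying a `like` or `dislike` reaction; verify those names there. `FeedbackStore` and `likeRate` are hypothetical, and a real handler must also return an invoke response, which is omitted here.

```typescript
type Reaction = "like" | "dislike";

// Simplified feedback submission. Field names are assumptions based on
// the Teams docs; verify them before relying on this shape.
interface FeedbackInvoke {
  type: "invoke";
  name: string; // expected: "message/submitAction"
  replyToId: string; // id of the agent message being rated
  value: {
    actionName: string; // expected: "feedback"
    actionValue: { reaction: Reaction; feedback?: string };
  };
}

interface FeedbackRecord {
  messageId: string;
  reaction: Reaction;
  comment?: string; // raw feedback payload, stored as-is
}

// Outgoing replies opt in to the thumbs up/down controls.
function replyWithFeedback(text: string) {
  return { type: "message" as const, text, channelData: { feedbackLoopEnabled: true } };
}

// Hypothetical store that records ratings for later analysis.
class FeedbackStore {
  private readonly records: FeedbackRecord[] = [];

  // Returns true if the activity was a feedback submission and was recorded.
  handleInvoke(activity: FeedbackInvoke): boolean {
    if (activity.name !== "message/submitAction" || activity.value.actionName !== "feedback") {
      return false;
    }
    const { reaction, feedback } = activity.value.actionValue;
    this.records.push({ messageId: activity.replyToId, reaction, comment: feedback });
    return true;
  }

  // Share of ratings that were positive; tracking it over time shows
  // whether changes to prompts or models help.
  likeRate(): number {
    if (this.records.length === 0) {
      return 0;
    }
    const likes = this.records.filter((r) => r.reaction === "like").length;
    return likes / this.records.length;
  }
}

const store = new FeedbackStore();
store.handleInvoke({
  type: "invoke",
  name: "message/submitAction",
  replyToId: "msg-1",
  value: { actionName: "feedback", actionValue: { reaction: "like" } },
});
store.handleInvoke({
  type: "invoke",
  name: "message/submitAction",
  replyToId: "msg-2",
  value: { actionName: "feedback", actionValue: { reaction: "dislike", feedback: "The date was wrong." } },
});
```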