Use the following sections to identify the Microsoft Purview capabilities that are supported for AI interactions with agents built in Microsoft Copilot Studio, along with recommended steps to get started with managing these AI interactions for security and compliance.

Capabilities supported

Use the following table to see at a glance the Microsoft Purview capabilities that are supported with agents built in Copilot Studio.

| Capability or solution in Microsoft Purview | Supported for AI interactions |
|---|---|
| DSPM for AI | ✓ |
| Auditing | ✓ |
| Data classification | ✓ |
| Sensitivity labels | ✓ |
| Encryption without sensitivity labels | ✕ |
| Data loss prevention | ✓ |
| Insider Risk Management | ✓ |
| Communication compliance | ✓ |
| eDiscovery | ✓ |
| Data Lifecycle Management | ✓ |
| Compliance Manager | ✓ |

Data Security Posture Management for AI

Use Microsoft Purview Data Security Posture Management (DSPM) for AI as your front door to discover, secure, and apply compliance controls for AI usage across your enterprise. This solution uses existing controls from Microsoft Purview information protection and compliance management with easy-to-use graphical tools and reports to quickly gain insights into AI use within your organization. With personalized recommendations, one-click policies help you protect your data and comply with regulatory requirements.

For more information, see Learn about Data Security Posture Management (DSPM) for AI.

AI app-specific information:

Recommendation: Get guided assistance to AI regulations, which uses control-mapping regulatory templates from Compliance Manager.

One-click policies available:

- DSPM for AI - Detect risky AI usage from the recommendation Detect risky interactions in AI apps.

- DSPM for AI - Unethical behavior in AI apps from the recommendation Detect unethical behavior in AI apps.

- DSPM for AI - Detect sensitive info shared with AI via network from the recommendation Extend insights into sensitive data in AI app interactions.

Auditing and AI interactions

Microsoft Purview Audit solutions provide comprehensive tools for searching and managing audit records of activities performed across various Microsoft services by users and admins, and help organizations to effectively respond to security events, forensic investigations, internal investigations, and compliance obligations.

Like other activities, prompts and responses are captured in the unified audit log. Events include how and when users interact with the AI app, and can include in which Microsoft 365 service the activity took place, and references to the files stored in Microsoft 365 that were accessed during the interaction. If these files have a sensitivity label applied, that's also captured.

These events flow into activity explorer in Data Security Posture Management for AI, where the data from prompts and responses can be displayed. You can also use the Audit solution from the Microsoft Purview portal to search and find these auditing events.

For more information, see Audit logs for Copilot and AI activities.

Data classification and AI interactions

Microsoft Purview data classification provides a comprehensive framework for identifying and tagging sensitive data across various Microsoft services, including Office 365, Dynamics 365, and Azure. Classifying data is often the first step to ensure compliance with data protection regulations and safeguard against unauthorized access, alteration, or destruction. You can use built-in system classifications or create your own.

Sensitive information types and trainable classifiers can be used to find sensitive data in user prompts and responses when they use AI apps. The resulting information then surfaces in the data classification dashboard and activity explorer in Data Security Posture Management for AI.

Sensitivity labels and AI interactions

AI apps that Microsoft Purview supports use existing controls to ensure that data stored in your tenant is never returned to the user or used by a large language model (LLM) if the user doesn't have access to that data. When the data has sensitivity labels from your organization applied to the content, there's an extra layer of protection:

- When a file is open in Word, Excel, or PowerPoint, or an email or calendar event is open in Outlook, the sensitivity of the data is displayed to users in the app with the label name and content markings (such as header or footer text) that have been configured for the label. Loop components and pages also support the same sensitivity labels.

- When the sensitivity label applies encryption, users must have the EXTRACT usage right, as well as VIEW, for the AI apps to return the data.

- This protection extends to data stored outside your Microsoft 365 tenant when it's open in an Office app (data in use). For example, local storage, network shares, and cloud storage.
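The usage-rights requirement for encrypted content can be pictured as a simple set check. The following is a minimal sketch, assuming usage rights are represented as plain strings; the function name and data shape are hypothetical and are not part of any Microsoft Purview API.

```python
# Hypothetical illustration only: this is not a Microsoft Purview API.
# Both rights named in the text must be present for the AI app to return the data.
REQUIRED_RIGHTS = frozenset({"VIEW", "EXTRACT"})


def can_return_encrypted_content(user_rights):
    """Return True when the user holds every usage right required for the data to be returned."""
    granted = {right.upper() for right in user_rights}
    return REQUIRED_RIGHTS <= granted


print(can_return_encrypted_content({"VIEW"}))             # False
print(can_return_encrypted_content({"VIEW", "EXTRACT"}))  # True
```

A user with only VIEW can open the labeled file directly, but in this sketch the check fails because EXTRACT is missing.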

Tip

If you haven't already, we recommend you enable sensitivity labels for SharePoint and OneDrive and also familiarize yourself with the file types and label configurations that these services can process. When sensitivity labels aren't enabled for these services, the encrypted files that Copilot and agents can access are limited to data in use from Office apps on Windows.

For instructions, see Enable sensitivity labels for Office files in SharePoint and OneDrive.

If you're not already using sensitivity labels, see Get started with sensitivity labels.

AI app-specific information:

- Supported when the knowledge source is SharePoint (includes OneDrive), and Dataverse with auto-labeling policies for the Data Map.

- Encryption is supported only with sensitivity labels, and only for the SharePoint knowledge source.

- Like sensitivity labels in Microsoft 365 Chat, agents built in Copilot Studio display the sensitivity label for items listed in the response and citations. Using the sensitivity labels' priority number that's defined in the Microsoft Purview portal, the latest response in Copilot displays the highest priority sensitivity label from the data used for that Copilot chat.

- Also like sensitivity label inheritance for Microsoft 365 Copilot, newly created content from Word and PowerPoint inherits the highest priority sensitivity label.
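The "highest priority label wins" behavior described above can be sketched as a maximum over the labels of the items used in a response. This is an illustrative sketch only: the `Label` type, the label names, and the priority numbers are hypothetical, and it assumes that a larger priority number means a higher priority.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class Label:
    name: str      # hypothetical label name
    priority: int  # assumption: a larger number means a higher priority


def label_to_display(labels: Iterable[Optional[Label]]) -> Optional[Label]:
    """Pick the highest priority label among the items used; unlabeled items (None) are ignored."""
    labeled = [label for label in labels if label is not None]
    return max(labeled, key=lambda label: label.priority, default=None)


# Labels of the items cited in one response; None stands for an unlabeled item.
sources = [
    Label("General", 1),
    None,
    Label("Highly Confidential", 3),
    Label("Confidential", 2),
]
print(label_to_display(sources).name)  # Highly Confidential
```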

Data loss prevention and AI interactions

Microsoft Purview Data Loss Prevention (DLP) helps you identify sensitive items across Microsoft 365 services and endpoints, monitor them, and helps protect against leakage of those items. It uses deep content inspection and contextual analysis to identify sensitive items and it enforces policies to protect sensitive data such as financial records, health information, or intellectual property.

Windows computers that are onboarded to Microsoft Purview can be configured for Endpoint data loss prevention (DLP) policies that warn or block users from sharing sensitive information with third-party generative AI sites that are accessed via a browser. For example, a user is prevented from pasting credit card numbers into ChatGPT, or they see a warning that they can override. For more information about the supported DLP actions and which platforms support them, see the first two rows in the table from Endpoint activities you can monitor and take action on.

Additionally, a DLP policy scoped to an AI location can restrict AI apps from processing sensitive content. For example, a DLP policy can restrict Microsoft 365 Copilot from summarizing files based on sensitivity labels such as "Highly Confidential". After you turn on this policy, Microsoft 365 Copilot and agents won't summarize files labeled "Highly Confidential" but can reference them with a link so the user can then open and view the content in Word. For more information that includes which AI apps support this DLP configuration, see Learn about the Microsoft 365 Copilot policy location.

AI app-specific information:

Only the following DLP capability is supported:

- When the knowledge source is SharePoint, a DLP policy scoped to the Microsoft 365 Copilot location can restrict agents built in Copilot Studio for Microsoft Teams, SharePoint, and Microsoft 365 Copilot from processing sensitive content based on a specified sensitivity label.

Insider Risk Management and AI interactions

Microsoft Purview Insider Risk Management helps you detect, investigate, and mitigate internal risks such as IP theft, data leakage, and security violations. It leverages machine learning models and various signals from Microsoft 365 and third-party indicators to identify potential malicious or inadvertent insider activities. The solution includes privacy controls like pseudonymization and role-based access, ensuring user-level privacy while enabling risk analysts to take appropriate actions.

Use the Risky AI usage policy template to detect risky usage that includes prompt injection attacks and accessing protected materials. Insights from these signals are integrated into Microsoft Defender XDR to provide a comprehensive view of AI-related risks.

Communication compliance and AI interactions

Microsoft Purview Communication Compliance provides tools to help you detect and manage regulatory compliance and business conduct violations across various communication channels, which include user prompts and responses for AI apps. It's designed with privacy by default, pseudonymizing usernames and incorporating role-based access controls. The solution helps identify and remediate inappropriate communications, such as sharing sensitive information, harassment, threats, and adult content.

To learn more about using communication compliance policies for AI apps, see Configure a communication compliance policy to detect for generative AI interactions.

eDiscovery and AI interactions

Microsoft Purview eDiscovery lets you identify and deliver electronic information that can be used as evidence in legal cases. The eDiscovery tools in Microsoft Purview support searching for content in Exchange Online, OneDrive for Business, SharePoint Online, Microsoft Teams, Microsoft 365 Groups, and Viva Engage teams. You can then prevent the information from deletion and export the information.

Because user prompts and responses for AI apps are stored in a user's mailbox, you can create a case and use search when a user's mailbox is selected as the source for a search query. For example, select and retrieve this data from the source mailbox by selecting from the query builder Add condition > Type > Contains any of > Edit > Copilot activity. This query condition includes all Copilot and other AI application activity.

After the search is refined, you can export the results or add to a review set. You can review and export information directly from the review set.

To learn more about identifying and deleting user AI interaction data, see Search for and delete Copilot data in eDiscovery.

Data Lifecycle Management and AI interactions

Microsoft Purview Data Lifecycle Management provides tools and capabilities to manage the lifecycle of organizational data by retaining necessary content and deleting unnecessary content. These tools ensure compliance with business, legal, and regulatory requirements.

Use retention policies to automatically retain or delete user prompts and responses for AI apps. For detailed information about how this retention works, see Learn about retention for Copilot & AI apps.

As with all retention policies and holds, if more than one policy for the same location applies to a user, the principles of retention resolve any conflicts. For example, the data is retained for the longest duration of all the applied retention policies or eDiscovery holds.
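The example in the previous paragraph, where the longest retention duration applies, can be sketched as taking the maximum of all applicable periods. This is a simplified illustration with hypothetical durations; it covers only that one example and does not model the other principles of retention.

```python
from datetime import timedelta


def effective_retention(periods):
    """Return the longest retention period among the applied policies and holds, or None if none apply."""
    return max(periods, default=None)


# Hypothetical retention periods from three policies that apply to the same user.
applied = [
    timedelta(days=365),      # 1 year
    timedelta(days=7 * 365),  # 7 years
    timedelta(days=3 * 365),  # 3 years
]
print(effective_retention(applied).days)  # 2555
```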

AI app-specific information:

- For retention policies, select the option for Microsoft Copilot Experiences.

Compliance Manager and AI interactions

Microsoft Purview Compliance Manager is a solution that helps you automatically assess and manage compliance across your multicloud environment. Compliance Manager can help you throughout your compliance journey, from taking inventory of your data protection risks to managing the complexities of implementing controls, staying current with regulations and certifications, and reporting to auditors.

To help you keep compliant with AI regulations, Compliance Manager provides regulatory templates to help you assess, implement, and strengthen your compliance requirements for all generative AI apps. For example, monitoring AI interactions and preventing data loss in AI applications. For more information, see Assessments for AI regulations.

Getting started recommended steps

Use the following steps to get started with managing data security & compliance for interactions from agents built in Microsoft Copilot Studio.

Because Data Security Posture Management for AI is your front door for securing and managing AI interactions, most of the following instructions use that solution:

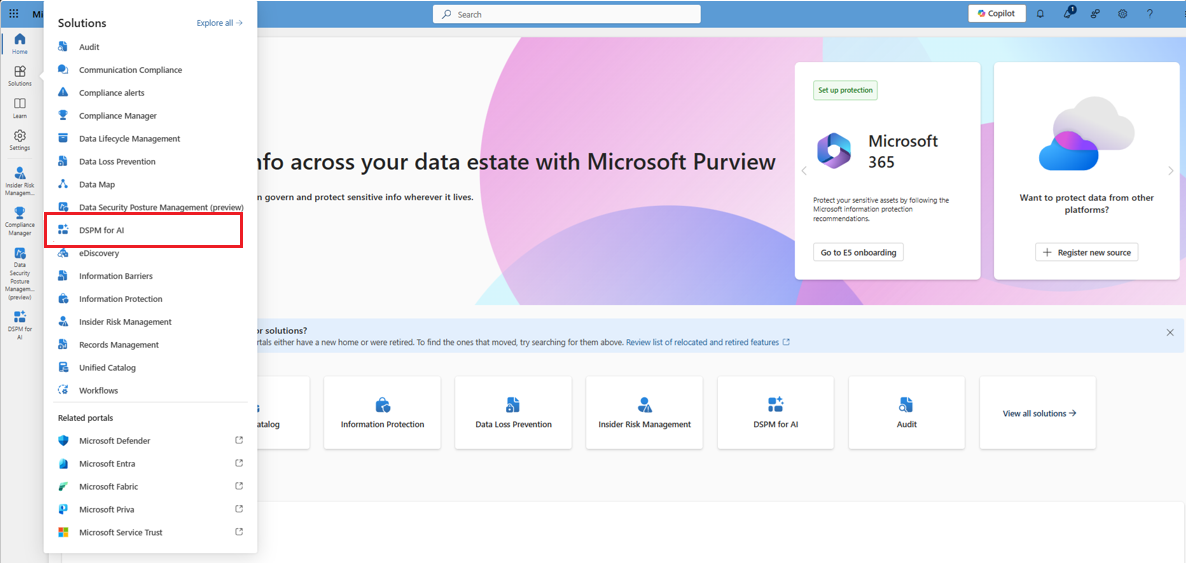

Sign in to the Microsoft Purview portal > Solutions > DSPM for AI with an account that has appropriate permissions. For example, an account that's a member of the Microsoft Entra Compliance Administrator group role.

Discover potential security risks in Microsoft Copilot Studio interactions

From DSPM for AI > Overview, in the Get Started section, look to see if auditing is on for your tenant. If not, select Activate Microsoft Purview Audit.

In the Recommendations section, select View all recommendations to take you to the Recommendations page. If the following recommendation has a status of Not Started, take action on it to create a one-click policy:

- Detect risky interactions in AI apps: This recommendation creates a policy to help calculate user risk by detecting risky prompts and responses in Microsoft 365 Copilot, agents, and other generative AI apps.

Wait at least a day for data, and then navigate to the Reports page to view the results of your policy. Select Copilot experiences & agents and view information such as:

- Total interactions over time (Microsoft Copilot and agents)

- Sensitive interactions per AI app

- Top unethical AI interactions

- Insider Risk severity

- Insider risk severity per AI app

- Potential risky AI usage

Select View details for each of the report graphs to view detailed activities in the activity explorer.

From the filters, select the AI app category of Copilot experiences & agents, and then use the other filters if you need to further refine the displayed data. Then drill down to each activity to view details, which include the prompts and responses when you're a member of the Microsoft Purview Content Explorer Content Viewer role group. For more information about this requirement, see Permissions for Data Security Posture Management for AI.

Apply compliance controls to Microsoft Copilot Studio

From the DSPM for AI > Recommendations page, under Not Started, locate and take action on the following recommendation:

- Detect unethical behavior in AI apps: This recommendation creates a policy to detect sensitive information in prompts and responses in agents built in Copilot Studio, and other generative AI apps.

If you need to ensure that interactions from agents built in Copilot Studio are retained for compliance reasons:

In the Microsoft Purview portal, navigate to Data Lifecycle Management > Policies > Retention Policies and create a retention policy to retain interactions from agents built in Copilot Studio by selecting the location Microsoft Copilot Experiences and specifying the required retention period. For more information, see Create and configure retention policies.

If you need to preserve, collect, analyze, review, or export Microsoft Copilot Studio interactions:

In the Microsoft Purview portal, navigate to eDiscovery > Cases > Create case. In the case, create a search and use the ItemClass property and the IPM.SkypeTeams.Message.Copilot.Studio.* value to search for these interactions in your organization.
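The trailing wildcard in the ItemClass value means that any item class beginning with that prefix is in scope. The following sketch illustrates the idea locally with Python's `fnmatch` module; it is not how eDiscovery evaluates the search, and the sample item class ending in `.Example` is a hypothetical value used only for illustration.

```python
from fnmatch import fnmatchcase

# ItemClass value from the step above; the trailing * matches any suffix.
PATTERN = "IPM.SkypeTeams.Message.Copilot.Studio.*"


def is_copilot_studio_item(item_class):
    """Return True when the item class starts with the Copilot Studio prefix in PATTERN."""
    return fnmatchcase(item_class, PATTERN)


samples = [
    "IPM.SkypeTeams.Message.Copilot.Studio.Example",  # hypothetical suffix
    "IPM.SkypeTeams.Message",
    "IPM.Note",
]
for item_class in samples:
    print(item_class, is_copilot_studio_item(item_class))
```

Only the first sample matches, because the other two don't begin with the full Copilot Studio prefix.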

Routinely review the reports and data risk assessments in DSPM for AI to determine if you need to make changes, and use activity explorer and events for deeper analysis of how users are interacting with agents built in Copilot Studio.