Activities are the building blocks that help you create end-to-end data workflows in Microsoft Fabric. Think of them as the tasks that move and transform your data to meet your business needs. You might use a copy activity to move data from SQL Server to Azure Blob Storage. Then you could add a Dataflow activity or Notebook activity to process and transform that data before loading it into Azure Synapse Analytics for reporting.

Activities are grouped together in pipelines to accomplish specific goals. For example, you might create a pipeline that:

- Pulls in log data from different sources

- Cleans and organizes that data

- Runs analytics to find insights

Grouping your activities into a pipeline lets you manage all these steps as one unit instead of handling each activity separately. You can deploy and schedule the entire pipeline at once so that it runs whenever you need it.
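To make the idea of a pipeline as a single unit concrete, here's a minimal Python sketch of the log-processing example. It's only an illustration: the activity names (`IngestLogs`, `CleanLogs`, `RunAnalytics`) and the dictionary layout are hypothetical, and the code doesn't call any Fabric API. It shows how a set of activities and their dependencies can be treated as one object with one run order.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each activity name maps to the set of
# activities that must finish before it can start.
pipeline = {
    "IngestLogs": set(),
    "CleanLogs": {"IngestLogs"},
    "RunAnalytics": {"CleanLogs"},
}


def run_order(activities):
    """Return the activity names in an order that respects dependencies."""
    return list(TopologicalSorter(activities).static_order())


print(run_order(pipeline))
# ['IngestLogs', 'CleanLogs', 'RunAnalytics']
```

Because the whole dictionary describes the workflow, scheduling or deploying it means handling one object rather than three separate tasks.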

Microsoft Fabric offers three types of activities:

- Data movement activities - Move data between systems

- Data transformation activities - Process and transform your data

- Control flow activities - Manage how your pipeline runs

Data movement activities

The copy activity moves data from one location to another. You can copy data from any supported source to any supported destination. Fabric supports many different data stores - check out the Connector overview to see what's available.

For more information, see How to copy data using the copy activity.

Data transformation activities

These activities help you process and transform your data. You can use them individually or chain them together with other activities.

For more information, see the data transformation activities article.

| Data transformation activity | Compute environment |
|---|---|
| Copy data | Compute managed by Microsoft Fabric |
| Dataflow Gen2 | Compute managed by Microsoft Fabric |
| Delete data | Compute managed by Microsoft Fabric |
| Fabric Notebook | Apache Spark clusters managed by Microsoft Fabric |
| HDInsight activity | Apache Spark clusters managed by Microsoft Fabric |
| Spark Job Definition | Apache Spark clusters managed by Microsoft Fabric |
| Stored Procedure | Azure SQL, Azure Synapse Analytics, or SQL Server |
| SQL script | Azure SQL, Azure Synapse Analytics, or SQL Server |

Control flow activities

These activities help you control how your pipeline runs:

| Control activity | Description |
|---|---|
| Append variable | Adds a value to an existing array variable. |
| Azure Batch activity | Runs an Azure Batch script. |
| Azure Databricks activity | Runs an Azure Databricks job (Notebook, Jar, Python). |
| Azure Machine Learning activity | Runs an Azure Machine Learning job. |
| Deactivate activity | Deactivates another activity. |
| Fail | Causes pipeline execution to fail with a customized error message and error code. |
| Filter | Applies a filter expression to an input array. |
| ForEach | Defines a repeating control flow in your pipeline. It iterates over a collection and runs the specified activities in a loop, similar to a foreach loop in programming languages. |
| Functions activity | Executes an Azure Function. |
| Get metadata | Retrieves metadata of any data in a pipeline. |
| If condition | Branches on a condition that evaluates to true or false, like an if statement in programming languages. It runs one set of activities when the condition is true and another set when the condition is false. |
| Invoke pipeline | Invokes another pipeline from the current pipeline. |
| KQL activity | Executes a KQL script against a Kusto instance. |
| Lookup activity | Reads or looks up a record, table name, or value from any external source. Succeeding activities can reference the output. |
| Set Variable | Sets the value of an existing variable. |
| Switch activity | Implements a switch expression that allows multiple subsequent activities for each potential result of the expression. |
| Teams activity | Posts a message in a Teams channel or group chat. |
| Until activity | Implements a Do-Until loop, similar to the Do-Until looping structure in programming languages. It runs a set of activities in a loop until the condition associated with the activity evaluates to true. You can specify a timeout value for the Until activity. |
| Wait activity | Pauses the pipeline for the specified time before it continues with subsequent activities. |
| Web activity | Calls a custom REST endpoint from a pipeline. |
| Webhook activity | Calls an endpoint and passes a callback URL. The pipeline run waits for the callback to be invoked before proceeding to the next activity. |
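Several of these activities are described by analogy with programming constructs. The following Python sketch spells out that analogy for Filter, ForEach, If condition, Append variable, and Until. It's an assumption-laden illustration, not how Fabric runs pipelines: the helper `run_until`, the file names, and the `:priority` suffix are all hypothetical.

```python
import time


def run_until(body, condition, timeout_seconds):
    """Do-Until: run body first, then test the condition.

    Returns (runs, condition_met). Stops when the condition is true
    or when the (hypothetical) timeout has elapsed.
    """
    deadline = time.monotonic() + timeout_seconds
    runs = 0
    while True:
        body()
        runs += 1
        if condition():
            return runs, True
        if time.monotonic() >= deadline:
            return runs, False


files = ["a.csv", "b.json", "c.csv"]

# Filter: apply a filter expression to an input array.
csv_files = [name for name in files if name.endswith(".csv")]

# ForEach + If condition + Append variable.
loaded = []
for name in csv_files:                      # ForEach over the collection
    if name.startswith("a"):                # If condition: true branch
        loaded.append(name + ":priority")   # Append variable
    else:                                   # If condition: false branch
        loaded.append(name)

# Until: repeat the body until the condition evaluates to true.
counter = {"n": 0}


def poll():
    counter["n"] += 1


runs, met = run_until(poll, lambda: counter["n"] >= 3, timeout_seconds=5)
print(csv_files, loaded, runs, met)
```

Note that the Do-Until body always runs at least once because the condition is checked after each iteration.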

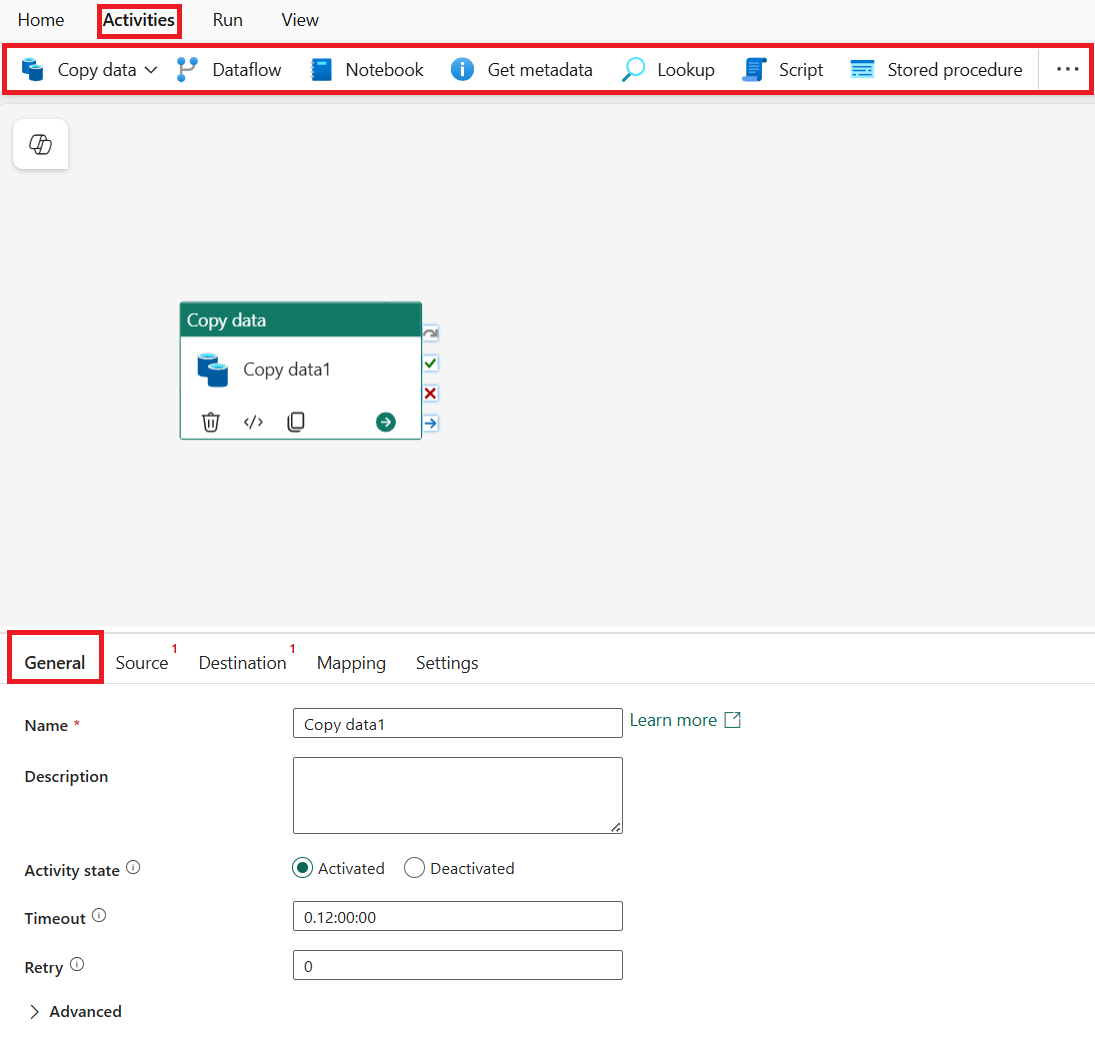

Adding activities to a pipeline with the Microsoft Fabric UI

Here's how to add and configure activities in your pipeline:

- Create a new pipeline in your workspace.

- Go to the Activities tab and browse through the available activities. Scroll right to see all options, then select an activity to add it to the pipeline editor.

- Select the activity on the canvas to see its General settings in the properties pane below.

- Configure the activity's remaining options on the other tabs in the properties pane.

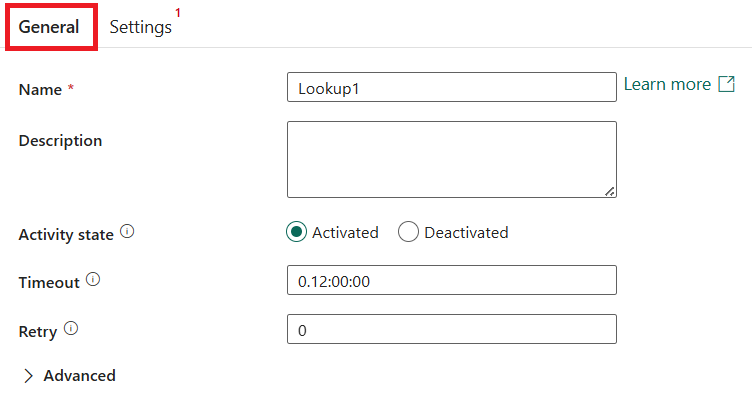

General settings

When you add a new activity to a pipeline and select it, you'll see its properties at the bottom of the screen. These include General, Settings, and sometimes other tabs.

Every activity includes Name and Description fields in the general settings. Some activities also have these options:

| Setting | Description |
|---|---|
| Timeout | How long an activity can run before timing out. The default is 12 hours, and the maximum is seven days. Use the format D.HH:MM:SS. |
| Retry | How many times to retry if the activity fails. |
| (Advanced properties) Retry interval (sec) | How many seconds to wait between retry attempts. |
| (Advanced properties) Secure output | When selected, activity output won't appear in logs. |
| (Advanced properties) Secure input | When selected, activity input won't appear in logs. |
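The Timeout, Retry, and Retry interval settings can be sketched in a few lines of Python. This is a hedged illustration of the semantics described in the table, not Fabric's implementation: `parse_timeout` and `run_with_retry` are hypothetical helpers, and the sketch assumes that a Retry value of N means up to N extra attempts after the first failure.

```python
import re
import time
from datetime import timedelta

# Values taken from the table: default 12 hours, maximum seven days.
DEFAULT_TIMEOUT = timedelta(hours=12)
MAX_TIMEOUT = timedelta(days=7)
TIMEOUT_FORMAT = re.compile(r"(\d+)\.(\d{2}):(\d{2}):(\d{2})")


def parse_timeout(text):
    """Parse a D.HH:MM:SS string into a timedelta and check the maximum."""
    match = TIMEOUT_FORMAT.fullmatch(text)
    if match is None:
        raise ValueError(f"expected D.HH:MM:SS, got {text!r}")
    days, hours, minutes, seconds = (int(part) for part in match.groups())
    if hours > 23 or minutes > 59 or seconds > 59:
        raise ValueError(f"field out of range in {text!r}")
    value = timedelta(days=days, hours=hours, minutes=minutes, seconds=seconds)
    if value > MAX_TIMEOUT:
        raise ValueError(f"{text!r} exceeds the seven-day maximum")
    return value


def run_with_retry(action, retry, retry_interval_sec, sleep=time.sleep):
    """Run action; on failure, retry up to `retry` more times.

    Returns (result, attempts). Re-raises the last error when the
    retries are used up.
    """
    attempts = 0
    while True:
        attempts += 1
        try:
            return action(), attempts
        except Exception:
            if attempts > retry:
                raise
            sleep(retry_interval_sec)  # wait between retry attempts


print(parse_timeout("0.12:00:00") == DEFAULT_TIMEOUT)  # True
```

For example, `parse_timeout("1.06:30:00")` yields one day, six hours, and 30 minutes, while `parse_timeout("7.00:00:01")` raises `ValueError` because it exceeds the maximum.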

Note

By default, you can have up to 120 activities per pipeline. This includes inner activities for containers.
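Because inner activities count toward the limit, a nested pipeline can reach 120 sooner than its top-level canvas suggests. The sketch below counts activities recursively. It's an illustration under assumptions: the `inner` key and the activity names are hypothetical, and it assumes a container counts as one activity in addition to the activities it holds.

```python
MAX_ACTIVITIES = 120  # default limit stated in the note above


def count_activities(activities):
    """Count activities, including those nested inside containers."""
    total = 0
    for activity in activities:
        total += 1  # the activity itself (assumed to count, even if a container)
        total += count_activities(activity.get("inner", []))
    return total


# Hypothetical pipeline: one lookup, then a ForEach container
# holding two inner activities.
pipeline = [
    {"name": "LookupTables"},
    {
        "name": "ForEachTable",
        "inner": [{"name": "CopyTable"}, {"name": "LogResult"}],
    },
]

total = count_activities(pipeline)
print(total, total <= MAX_ACTIVITIES)
# 4 True
```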