Applies to: ✅ Data Engineering and Data Science in Microsoft Fabric

Get started with the Livy API for Fabric Data Engineering by creating a Lakehouse, authenticating with a Microsoft Entra token, discovering the Livy API endpoint, submitting either batch or session jobs from a remote client to Fabric Spark compute, and monitoring the results.

Important

This feature is in preview.

Prerequisites

Fabric Premium or Trial capacity with a Lakehouse

Enable the Tenant Admin Setting for Livy API (preview)

A remote client such as Visual Studio Code with Jupyter notebook support, PySpark, and Microsoft Authentication Library (MSAL) for Python

Either a Microsoft Entra app token; see Register an application with the Microsoft identity platform

Or a Microsoft Entra service principal (SPN) token; see Add and manage application credentials in Microsoft Entra ID

Choosing a REST API client

You can use various programming languages or GUI clients to interact with REST API endpoints. This article uses Visual Studio Code, which needs to be configured with Jupyter Notebooks, PySpark, and the Microsoft Authentication Library (MSAL) for Python.

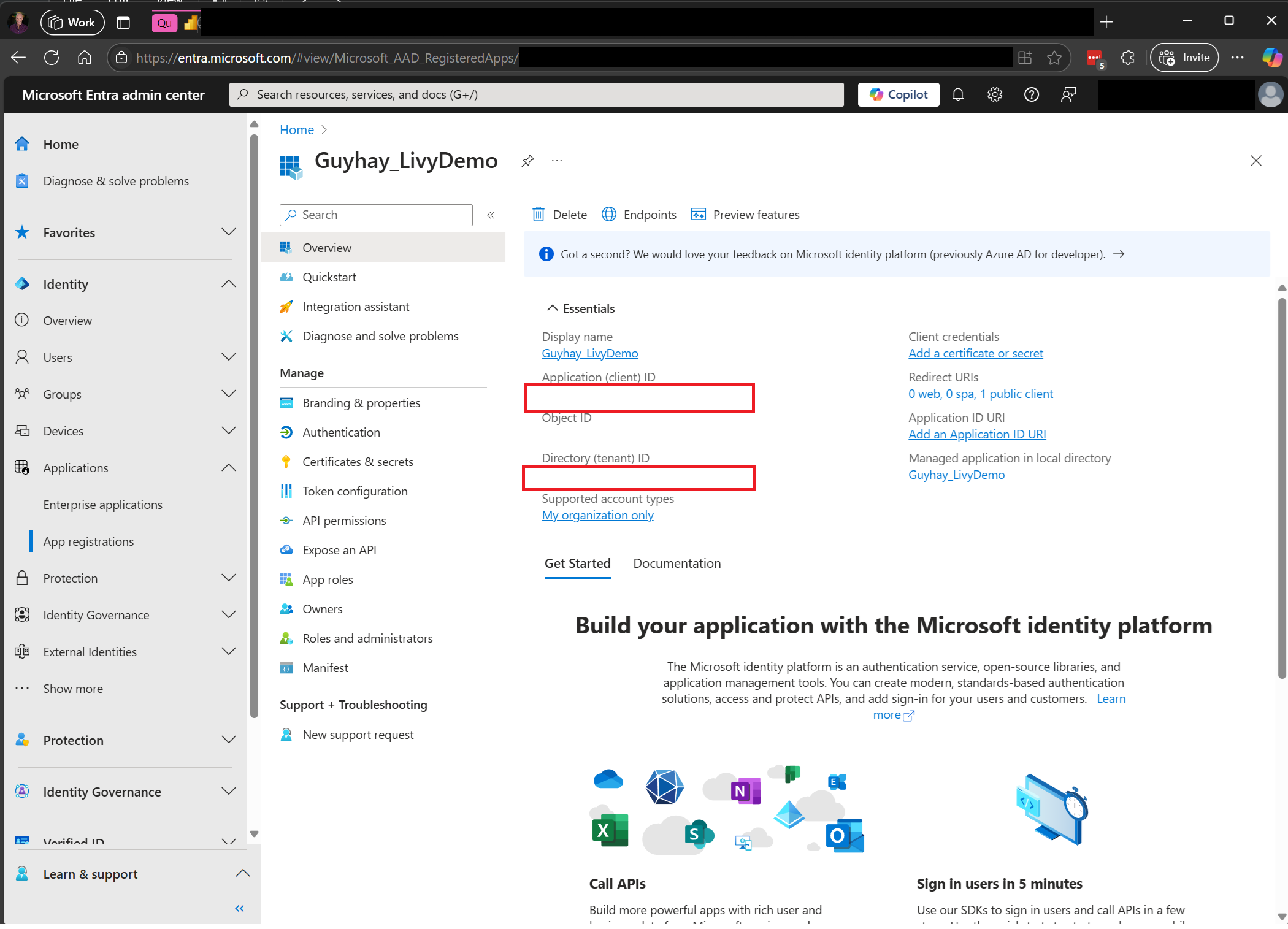

How to authorize the Livy API requests with an Entra SPN Token

To work with Fabric APIs, including the Livy API, you first need to create a Microsoft Entra application, create a client secret for it, and use that secret in your code. Your application needs to be registered and configured to perform API calls against Fabric. For more information, see Add and manage application credentials in Microsoft Entra ID.

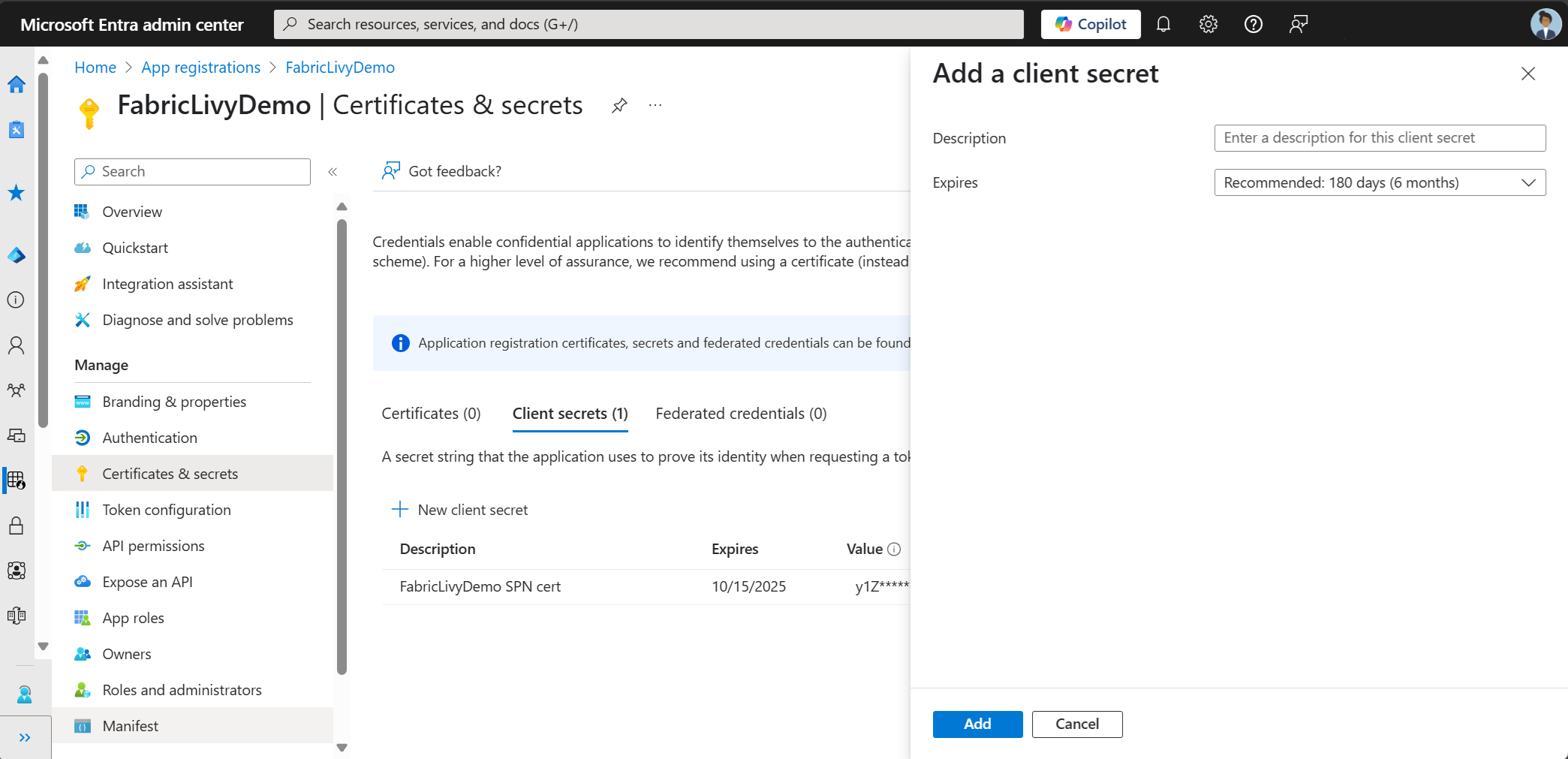

After creating the app registration, create a client secret.

As you create the client secret, make sure to copy its value. You need it later in the code, and the secret can't be viewed again. In addition to the secret, your code also needs the Application (client) ID and the Directory (tenant) ID.

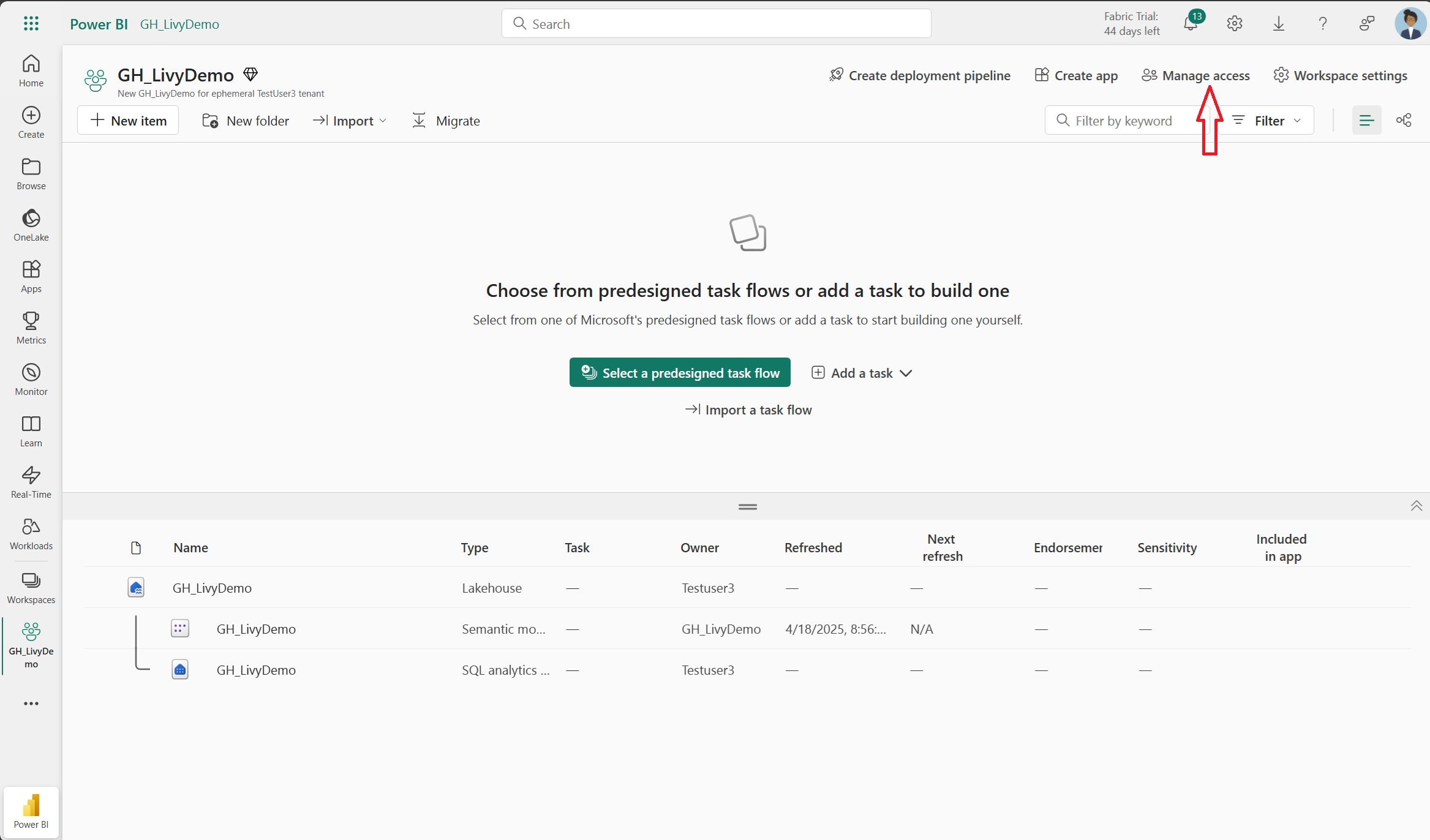

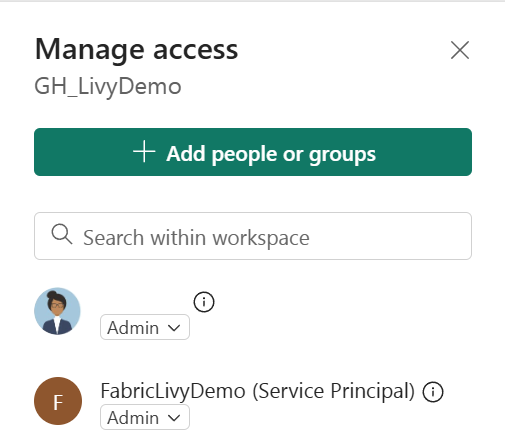

Next, add the client secret to your workspace.

Search for the Entra client secret, add it to the workspace, and make sure the newly added secret has Admin permissions.
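The following is a minimal sketch of how a client might exchange the tenant ID, client ID, and client secret for a token using the standard OAuth 2.0 client-credentials flow. It uses only the Python standard library; the function names are hypothetical, and the `https://api.fabric.microsoft.com/.default` scope is an assumption about the resource identifier Fabric expects. MSAL for Python offers an equivalent call through `ConfidentialClientApplication.acquire_token_for_client`.

```python
import json
import urllib.parse
import urllib.request

# Assumption: Fabric APIs accept tokens issued for this resource's default scope.
FABRIC_DEFAULT_SCOPE = "https://api.fabric.microsoft.com/.default"


def build_token_request(tenant_id, client_id, client_secret):
    """Return (url, form_body_bytes) for a client-credentials token request."""
    url = f"https://login.microsoftonline.com/{tenant_id}/oauth2/v2.0/token"
    form = {
        "grant_type": "client_credentials",
        "client_id": client_id,
        "client_secret": client_secret,
        "scope": FABRIC_DEFAULT_SCOPE,
    }
    return url, urllib.parse.urlencode(form).encode("utf-8")


def acquire_spn_token(tenant_id, client_id, client_secret):
    """POST the token request and return the access token (needs network access)."""
    url, body = build_token_request(tenant_id, client_id, client_secret)
    request = urllib.request.Request(url, data=body, method="POST")
    with urllib.request.urlopen(request) as response:
        return json.load(response)["access_token"]


def auth_headers(token):
    """Build the headers a Livy API request would carry."""
    return {"Authorization": f"Bearer {token}", "Content-Type": "application/json"}
```

In practice, the secret would be read from an environment variable or a secret store rather than written into the notebook.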

How to authorize the Livy API requests with an Entra app token

To work with Fabric APIs, including the Livy API, you first need to create a Microsoft Entra application and obtain a token. Your application needs to be registered and configured to perform API calls against Fabric. For more information, see Register an application with the Microsoft identity platform.

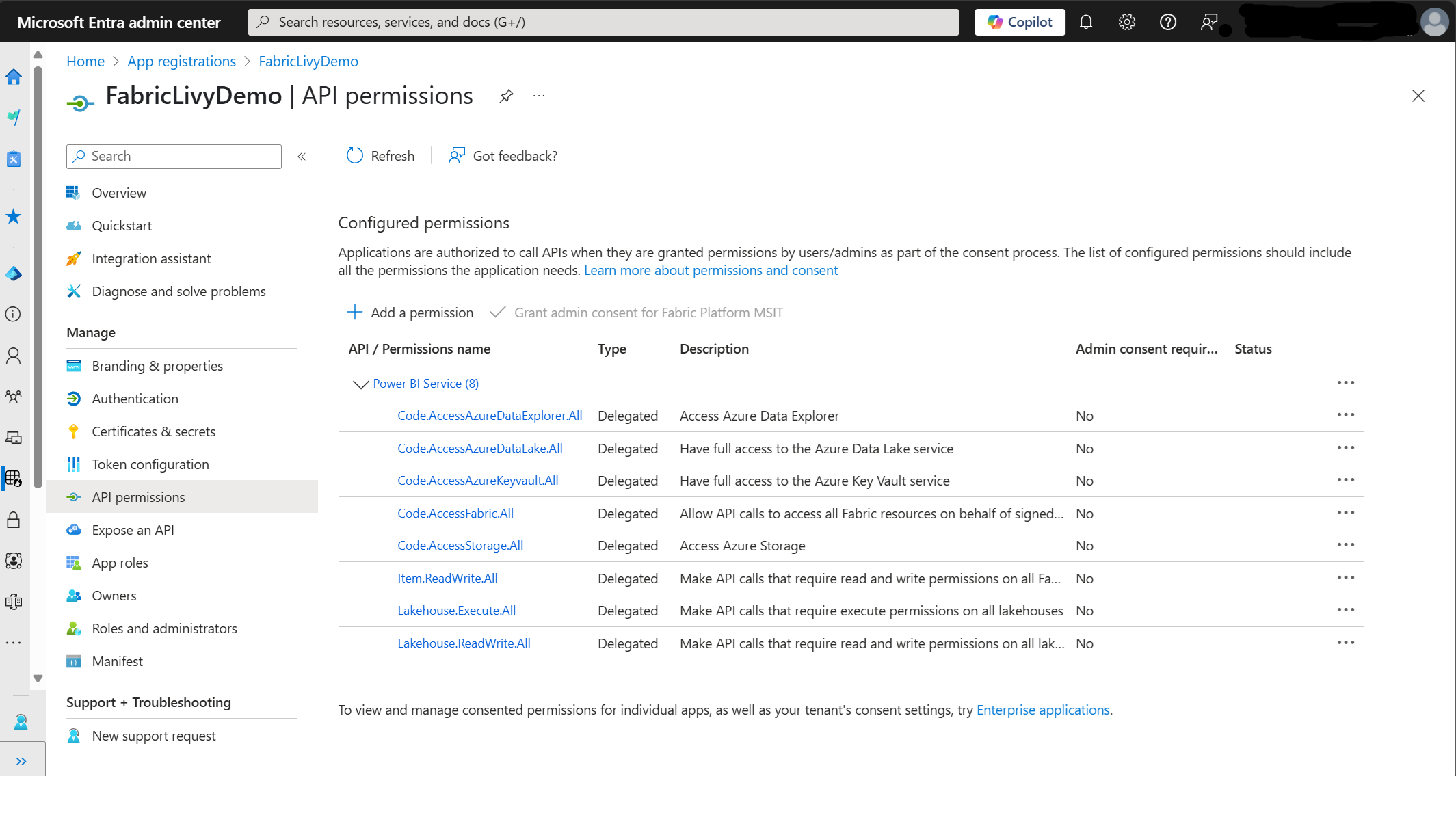

Executing Livy jobs requires several Microsoft Entra scope permissions. This example, which covers simple Spark code, storage access, and SQL, uses the following scopes:

- Code.AccessAzureDataExplorer.All

- Code.AccessAzureDataLake.All

- Code.AccessAzureKeyvault.All

- Code.AccessFabric.All

- Code.AccessStorage.All

- Item.ReadWrite.All

- Lakehouse.Execute.All

- Workspace.ReadWrite.All

Note

During public preview, these scopes may change as more granular scopes are added. When the scopes change, your Livy app may break. Check this list, which will be updated with the additional scopes.

Some customers want more granular permissions than the prior list. You can remove Item.ReadWrite.All and replace it with these more granular scope permissions:

- Code.AccessAzureDataExplorer.All

- Code.AccessAzureDataLake.All

- Code.AccessAzureKeyvault.All

- Code.AccessFabric.All

- Code.AccessStorage.All

- Lakehouse.Execute.All

- Lakehouse.ReadWrite.All

- Workspace.ReadWrite.All

- Notebook.ReadWrite.All

- SparkJobDefinition.ReadWrite.All

- MLModel.ReadWrite.All

- MLExperiment.ReadWrite.All

- Dataset.ReadWrite.All

When you register your application, you will need both the Application (client) ID and the Directory (tenant) ID.
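As a rough sketch, the scopes listed above could be passed to MSAL for Python when requesting a user token interactively. The helper names here are hypothetical, and prefixing each scope with `https://api.fabric.microsoft.com/` is an assumption about how the scopes are qualified. The `msal` package is third-party, so it is imported lazily inside the function that needs it.

```python
FABRIC_RESOURCE = "https://api.fabric.microsoft.com"

# The first scope list from this article (simple Spark code, storage access, SQL).
SCOPE_NAMES = [
    "Code.AccessAzureDataExplorer.All",
    "Code.AccessAzureDataLake.All",
    "Code.AccessAzureKeyvault.All",
    "Code.AccessFabric.All",
    "Code.AccessStorage.All",
    "Item.ReadWrite.All",
    "Lakehouse.Execute.All",
    "Workspace.ReadWrite.All",
]


def qualified_scopes(names, resource=FABRIC_RESOURCE):
    """Prefix each scope name with the resource URI (assumed format)."""
    return [f"{resource}/{name}" for name in names]


def acquire_user_token(tenant_id, client_id, scope_names=SCOPE_NAMES):
    """Open an interactive sign-in and return the access token (needs msal and a browser)."""
    import msal  # third-party: pip install msal

    app = msal.PublicClientApplication(
        client_id,
        authority=f"https://login.microsoftonline.com/{tenant_id}",
    )
    result = app.acquire_token_interactive(scopes=qualified_scopes(scope_names))
    if "access_token" not in result:
        raise RuntimeError(result.get("error_description", "token acquisition failed"))
    return result["access_token"]
```

To use the more granular permissions instead, swap the contents of `SCOPE_NAMES` for the second list.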

The authenticated user calling the Livy API needs to be a member, with a Contributor role, of the workspace where both the API and the data source items are located. For more information, see Give users access to workspaces.

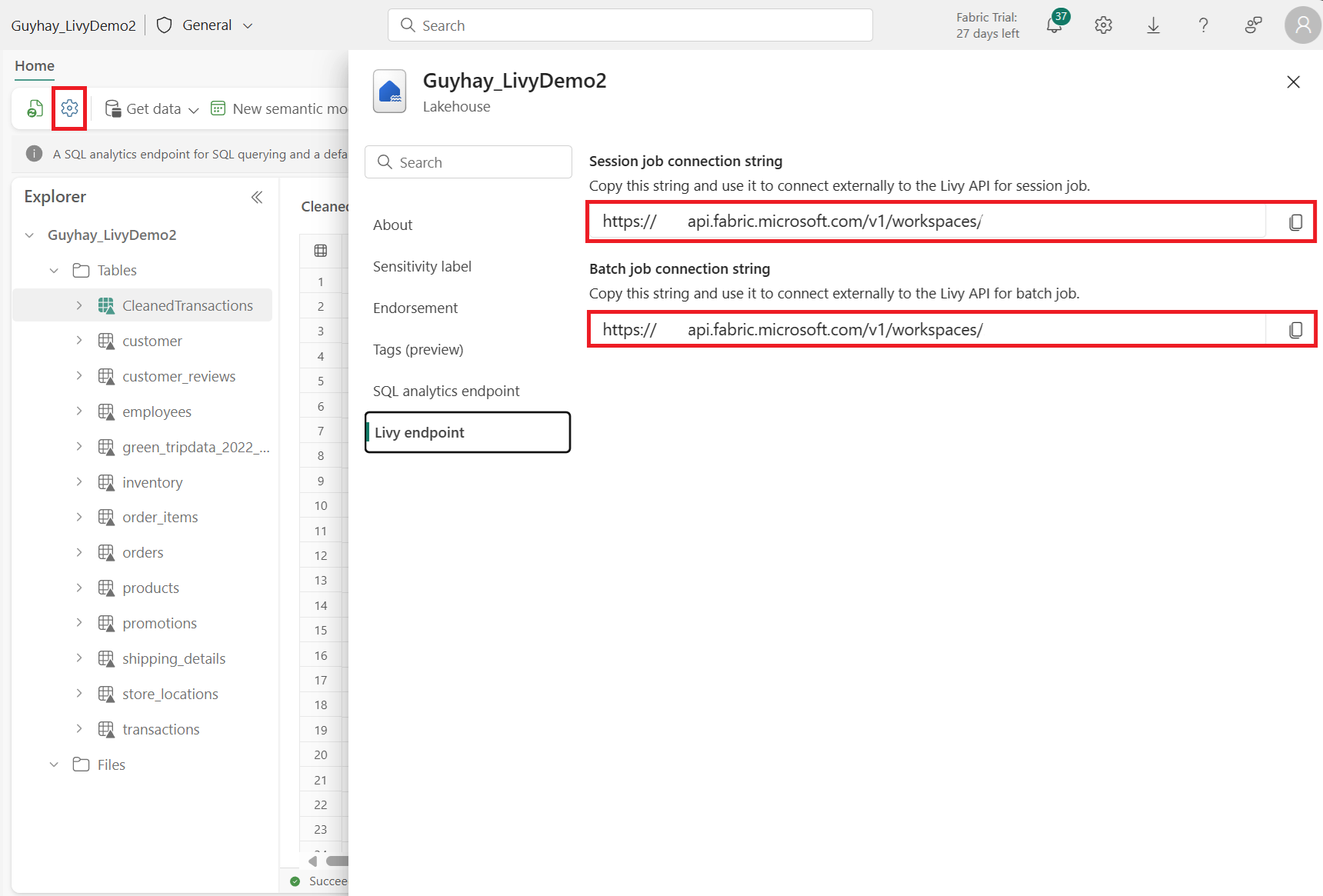

How to discover the Fabric Livy API endpoint

A Lakehouse artifact is required to access the Livy endpoint. Once the Lakehouse is created, the Livy API endpoint can be located within the settings panel.

The endpoint of the Livy API would follow this pattern:

https://api.fabric.microsoft.com/v1/workspaces/<ws_id>/lakehouses/<lakehouse_id>/livyapi/versions/2023-12-01/

Append either sessions or batches to the URL, depending on the type of job you choose.
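The URL pattern can be assembled in code along these lines. This is only a sketch: `build_livy_url` is a hypothetical helper, and the workspace and Lakehouse IDs are placeholders you would copy from the Lakehouse settings panel.

```python
API_VERSION = "2023-12-01"


def build_livy_url(workspace_id, lakehouse_id, job_kind):
    """Return the Livy endpoint for 'sessions' or 'batches' jobs."""
    if job_kind not in ("sessions", "batches"):
        raise ValueError("job_kind must be 'sessions' or 'batches'")
    return (
        f"https://api.fabric.microsoft.com/v1/workspaces/{workspace_id}"
        f"/lakehouses/{lakehouse_id}/livyapi/versions/{API_VERSION}/{job_kind}"
    )


# Placeholder IDs, not real values.
sessions_url = build_livy_url("<ws_id>", "<lakehouse_id>", "sessions")
```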

Download the Livy API Swagger files

The full swagger files for the Livy API are available here.

Submit Livy API jobs

Now that setup of the Livy API is complete, you can choose to submit either batch or session jobs.
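Whichever job type you choose, a remote client typically submits the job and then polls its state until it is ready or finished. The sketch below illustrates one way to do that. The `wait_for_state` helper is hypothetical, and it assumes the response JSON carries a `state` field using the standard Apache Livy state names (for example `starting`, `idle`, `success`, `dead`); the Fabric responses may differ.

```python
import time

# Assumption: failure states follow the Apache Livy state names.
FAILED_STATES = frozenset({"dead", "error", "killed"})


def wait_for_state(fetch_status, wanted, failed=FAILED_STATES,
                   poll_seconds=5.0, max_polls=120, sleep=time.sleep):
    """Call fetch_status() until its 'state' is in wanted; raise on failure or timeout.

    fetch_status is any zero-argument callable that returns the parsed JSON
    status of a session or batch as a dict.
    """
    for _ in range(max_polls):
        state = fetch_status()["state"]
        if state in wanted:
            return state
        if state in failed:
            raise RuntimeError(f"Livy job ended in state {state!r}")
        sleep(poll_seconds)
    raise TimeoutError(f"Livy job did not reach {sorted(wanted)} after {max_polls} polls")
```

With the `requests` library, `fetch_status` could be something like `lambda: requests.get(session_url, headers=headers).json()`, where `session_url` is a hypothetical variable holding the URL of the session or batch you created. A session would usually wait for `{"idle"}` and a batch for `{"success"}`.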

Integration with Fabric Environments

By default, this Livy API session runs against the default starter pool for the workspace. Alternatively, you can use a Fabric environment to customize the Spark pool that the Livy API session uses for these Spark jobs. For more information, see Create, configure, and use an environment in Microsoft Fabric.

To use a Fabric Environment in a Livy Spark session, simply update the json to include this payload.

create_livy_session = requests.post(livy_base_url, headers=headers, json={

    "conf": {

        # Replace EnvironmentID with your environment ID

        "spark.fabric.environmentDetails": "{\"id\" : \"EnvironmentID\"}"

    }

})
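Because the value of `spark.fabric.environmentDetails` is itself a JSON string, hand-written escaping is easy to get wrong. One possible approach, sketched here with a hypothetical `environment_conf` helper, is to let `json.dumps` produce the inner string:

```python
import json


def environment_conf(environment_id):
    """Return a Livy 'conf' dict that points the job at a Fabric environment."""
    return {"spark.fabric.environmentDetails": json.dumps({"id": environment_id})}


# "EnvironmentID" is a placeholder; replace it with your environment ID.
session_payload = {"conf": environment_conf("EnvironmentID")}
```

The resulting `session_payload` can be passed as the `json=` argument of the request.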

To use a Fabric Environment in a Livy Spark batch session, simply update the json payload as shown below.

payload_data = {

    "name": "livybatchdemo_with" + newlakehouseName,

    "file": "abfss://YourABFSPathToYourPayload.py",

    "conf": {

        "spark.targetLakehouse": "Fabric_LakehouseID",

        # Remove the next line to use starter pools instead of an environment; replace EnvironmentID with your environment ID

        "spark.fabric.environmentDetails": "{\"id\" : \"EnvironmentID\"}"

    }

}

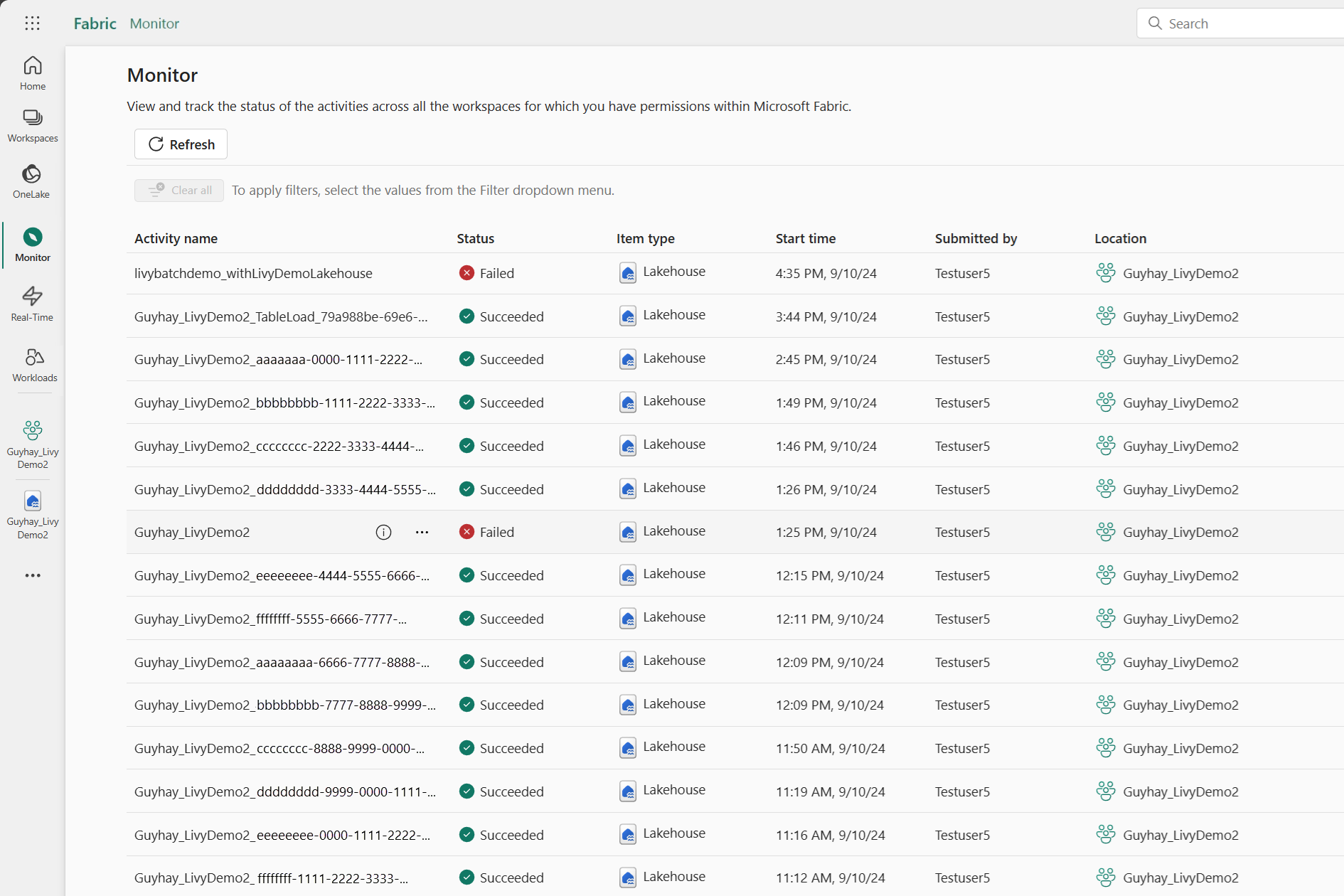

How to monitor the request history

You can use the Monitoring Hub to see your prior Livy API submissions and debug any submission errors.

Related content

- Apache Livy REST API documentation

- Get Started with Admin settings for your Fabric Capacity

- Apache Spark workspace administration settings in Microsoft Fabric

- Register an application with the Microsoft identity platform

- Microsoft Entra permission and consent overview

- Fabric REST API Scopes

- Apache Spark monitoring overview

- Apache Spark application detail