This section describes using built-in monitoring and observability features for Lakeflow Declarative Pipelines in the Azure Databricks user interface. These features support tasks such as:

- Observing the progress and status of pipeline updates. See What pipeline details are available in the UI?.

- Alerting on pipeline events such as the success or failure of pipeline updates. See Add email notifications for pipeline events.

- Viewing metrics for streaming sources like Apache Kafka and Auto Loader (Public Preview). See View streaming metrics.

Add email notifications for pipeline events

You can configure one or more email addresses to receive notifications when any of the following events occur:

- A pipeline update completes successfully.

- A pipeline update fails, either with a retryable or a non-retryable error. Select this option to receive a notification for all pipeline failures.

- A pipeline update fails with a non-retryable (fatal) error. Select this option to receive a notification only when a non-retryable error occurs.

- A single data flow fails.

To configure email notifications when you create or edit a pipeline:

- Click Add notification.

- Enter one or more email addresses to receive notifications.

- Select the checkbox for each notification type to send to the configured email addresses.

- Click Add notification.
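The same choices can also be written into a pipeline's JSON settings instead of being made in the UI. The following is a minimal sketch of what such a `notifications` entry could look like, built in Python. The field names (`email_recipients`, `alerts`) and the four alert identifiers are assumptions based on the pipeline settings format and should be checked against the current pipeline settings reference. `build_notifications` is a hypothetical helper, not part of any Databricks library.

```python
import json

# Assumed alert identifiers, mapped to the notification types listed above.
ALERT_TYPES = {
    "on-update-success": "A pipeline update completes successfully.",
    "on-update-failure": "A pipeline update fails (retryable or non-retryable).",
    "on-update-fatal-failure": "A pipeline update fails with a non-retryable error.",
    "on-flow-failure": "A single data flow fails.",
}


def build_notifications(recipients, alerts):
    """Return a one-element `notifications` list for pipeline settings.

    Hypothetical helper: it only validates input and builds a dictionary.
    It does not call any Databricks API.
    """
    if not recipients:
        raise ValueError("At least one email address is required.")
    unknown = sorted(set(alerts) - set(ALERT_TYPES))
    if unknown:
        raise ValueError(f"Unknown alert types: {unknown}")
    return [{"email_recipients": list(recipients), "alerts": list(alerts)}]


# Example: notify an (illustrative) ops address on any failure.
settings_fragment = {
    "notifications": build_notifications(
        ["ops@example.com"],
        ["on-update-failure", "on-flow-failure"],
    )
}
print(json.dumps(settings_fragment, indent=2))
```

Validating the alert names up front is a design choice for this sketch: a misspelled identifier is caught locally rather than when the settings are submitted.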

Note

Create custom responses to events, including notifications or custom handling, by using Python event hooks.

Viewing pipelines in the UI

Find your Lakeflow Declarative Pipelines from the Jobs & Pipelines option in the workspace sidebar. This opens the Jobs & pipelines page, where you can view information about each job and pipeline you have access to. Click the name of a pipeline to open the pipeline details page.

Note

To access the event log in the new Lakeflow Pipelines Editor (Beta), navigate to the Issues and Insights panel at the bottom of the editor, then click View logs or click the Open in logs button next to any error. For more details, see Lakeflow Pipelines Editor and Pipeline setting for event log.

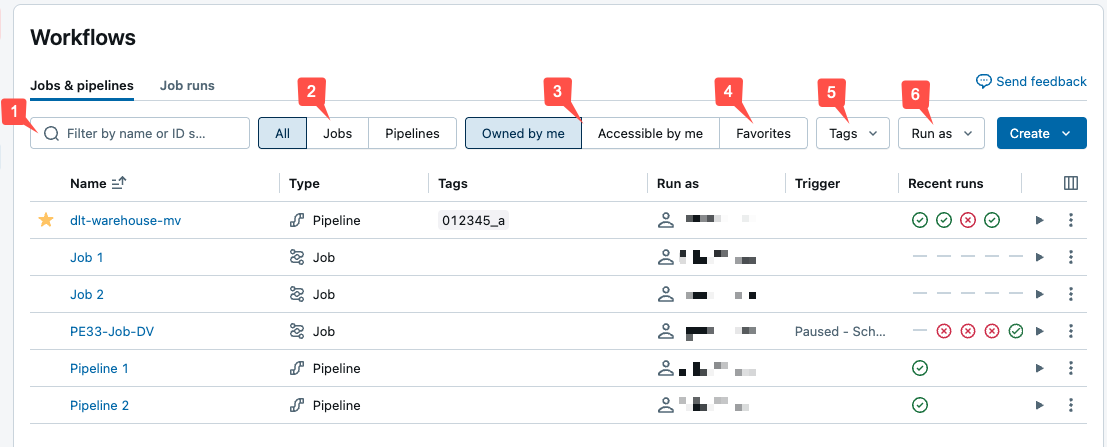

Using the Jobs & pipelines list

To view the list of pipelines you have access to, click Jobs & Pipelines in the sidebar. The Jobs & pipelines tab lists information about all available jobs and pipelines, such as the creator, the trigger (if any), and the result of the last five runs.

To change the columns displayed in the list, click and select or deselect columns.

Important

The unified Jobs & pipelines list is in Public Preview. You can disable the feature and return to the default experience by disabling Jobs and pipelines: Unified management, search, & filtering. See Manage Azure Databricks Previews for more information.

You can filter jobs in the Jobs & pipelines list in the following ways:

- Text search: Keyword search is supported for the Name and ID fields. To search for a tag created with a key and value, you can search by the key, the value, or both the key and value. For example, for a tag with the key `department` and the value `finance`, you can search for `department` or `finance` to find matching jobs. To search by the key and value, enter the key and value separated by a colon (for example, `department:finance`).

- Type: Filter by Jobs, Pipelines, or All. If you select Pipelines, you can also filter by Pipeline type, which includes ETL and Ingestion pipelines.

- Owner: show only the jobs you own.

- Favorites: show jobs you have marked as favorites.

- Tags: Filter by tag. Use the tags drop-down menu to filter for up to five tags at the same time, or use the keyword search directly.

- Run as: Filter by up to two `run as` values.
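To make the tag search rules in the Text search item concrete, here is a small Python sketch of the matching behavior described there: a query matches a tag by key, by value, or by `key:value`. The function `tag_matches` is hypothetical and illustrates the documented behavior only. Exact, case-sensitive comparison is an assumption; the actual search may be more lenient.

```python
def tag_matches(query: str, key: str, value: str) -> bool:
    """Return True if `query` matches a tag by key, value, or key:value.

    Assumes exact, case-sensitive comparison (an illustration, not the
    product's actual search implementation).
    """
    query = query.strip()
    if ":" in query:
        # A colon means the query names both the key and the value.
        query_key, query_value = query.split(":", 1)
        return query_key == key and query_value == value
    # Otherwise the query may match either the key or the value.
    return query in (key, value)


# The example tag from the text: key "department", value "finance".
for q in ("department", "finance", "department:finance", "department:hr"):
    print(q, tag_matches(q, "department", "finance"))
```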

To start a job or a pipeline, click the play button. To stop a job or a pipeline, click the stop button. To access other actions, click the kebab menu. For example, you can delete the job or pipeline, or access settings for a pipeline from that menu.

What pipeline details are available in the UI?

The pipeline graph appears as soon as an update to a pipeline has successfully started. Arrows represent dependencies between data sets in your pipeline. By default, the pipeline details page shows the most recent update for the pipeline, but you can select older updates from a drop-down menu.

Details include the pipeline ID, source code, compute cost, product edition, and the channel configured for the pipeline.

To see a tabular view of data sets, click the List tab. The List view shows every data set in your pipeline as a row in a table and is useful when your pipeline DAG is too large to visualize in the Graph view. You can control which data sets are displayed in the table using filters such as data set name, type, and status. To switch back to the DAG visualization, click Graph.

The Run as user is the pipeline owner, and pipeline updates run with this user's permissions. To change the run as user, click Permissions and change the pipeline owner.

How can you view data set details?

Clicking on a data set in the pipeline graph or data set list shows details about the data set. Details include the data set schema, data quality metrics, and a link to the source code defining the data set.

View update history

To view the history and status of pipeline updates, click the update history drop-down menu in the top bar.

Select the update in the drop-down menu to view the graph, details, and events for an update. To return to the latest update, click Show the latest update.

View streaming metrics

Important

Streaming observability for Lakeflow Declarative Pipelines is in Public Preview.

You can view streaming metrics from the data sources supported by Spark Structured Streaming, like Apache Kafka, Amazon Kinesis, Auto Loader, and Delta tables, for each streaming flow in your Lakeflow Declarative Pipelines. Metrics are displayed as charts in the Lakeflow Declarative Pipelines UI's right pane and include backlog seconds, backlog bytes, backlog records, and backlog files. The charts display the maximum value aggregated by minute and a tooltip shows maximum values when you hover over the chart. The data is limited to the last 48 hours from the current time.
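As an illustration of what "maximum value aggregated by minute" over a 48-hour window means, the following Python sketch buckets raw metric samples by minute and keeps the largest value in each bucket. The function name `max_per_minute`, the sample format (timestamp and value pairs), and the sample data are all assumptions made for this example. This is not how the UI is implemented.

```python
from datetime import datetime, timedelta, timezone


def max_per_minute(samples, now, window=timedelta(hours=48)):
    """Aggregate (timestamp, value) samples into per-minute maxima.

    Samples older than `window` before `now`, or later than `now`,
    are dropped. Returns a dict keyed by the start of each minute.
    """
    cutoff = now - window
    buckets = {}
    for ts, value in samples:
        if ts < cutoff or ts > now:
            continue
        # Truncate the timestamp to the start of its minute.
        minute = ts.replace(second=0, microsecond=0)
        buckets[minute] = max(value, buckets.get(minute, value))
    return dict(sorted(buckets.items()))


# Hypothetical backlog-records samples.
now = datetime(2025, 1, 3, 12, 0, tzinfo=timezone.utc)
samples = [
    (now - timedelta(minutes=5, seconds=10), 100),
    (now - timedelta(minutes=5, seconds=40), 250),  # same minute as above
    (now - timedelta(hours=49), 999),               # outside the 48-hour window
]
for minute, value in max_per_minute(samples, now).items():
    print(minute.isoformat(), value)
```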

Tables in your pipeline with streaming metrics available display an icon when viewing the pipeline DAG in the UI Graph view. To view the streaming metrics, click the icon to display the streaming metric chart in the Flows tab in the right pane. You can also apply a filter to view only tables with streaming metrics by clicking List and then clicking Has streaming metrics.

Each streaming source supports only specific metrics. Metrics not supported by a streaming source are not available to view in the UI. The following table shows the metrics available for supported streaming sources:

| Source | Backlog bytes | Backlog records | Backlog seconds | Backlog files |
|---|---|---|---|---|
| Kafka | ✓ | ✓ | | |
| Kinesis | ✓ | ✓ | | |
| Delta | ✓ | ✓ | | |
| Auto Loader | ✓ | ✓ | | |
| Google Pub/Sub | ✓ | ✓ | | |