Introduction

This article explains, step by step, how to create an Azure Databricks workspace. Azure Databricks is an Apache Spark-based analytics platform optimized for the Microsoft Azure cloud services platform.

As with other Azure resources, creating an Azure Databricks workspace starts with signing in to the Azure portal at https://portal.azure.com

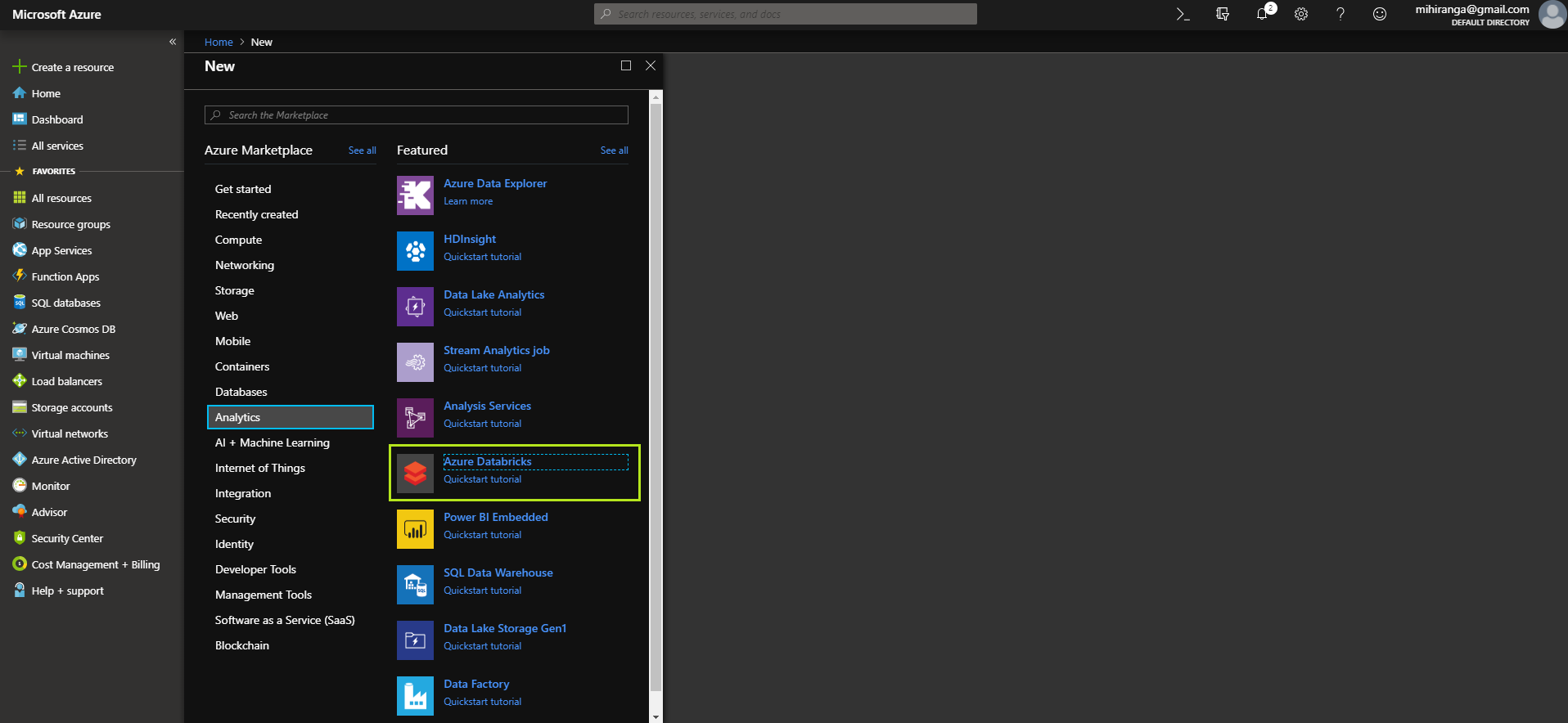

Click the Create a resource button, select the Analytics tab, and then choose Azure Databricks, as in the image below.

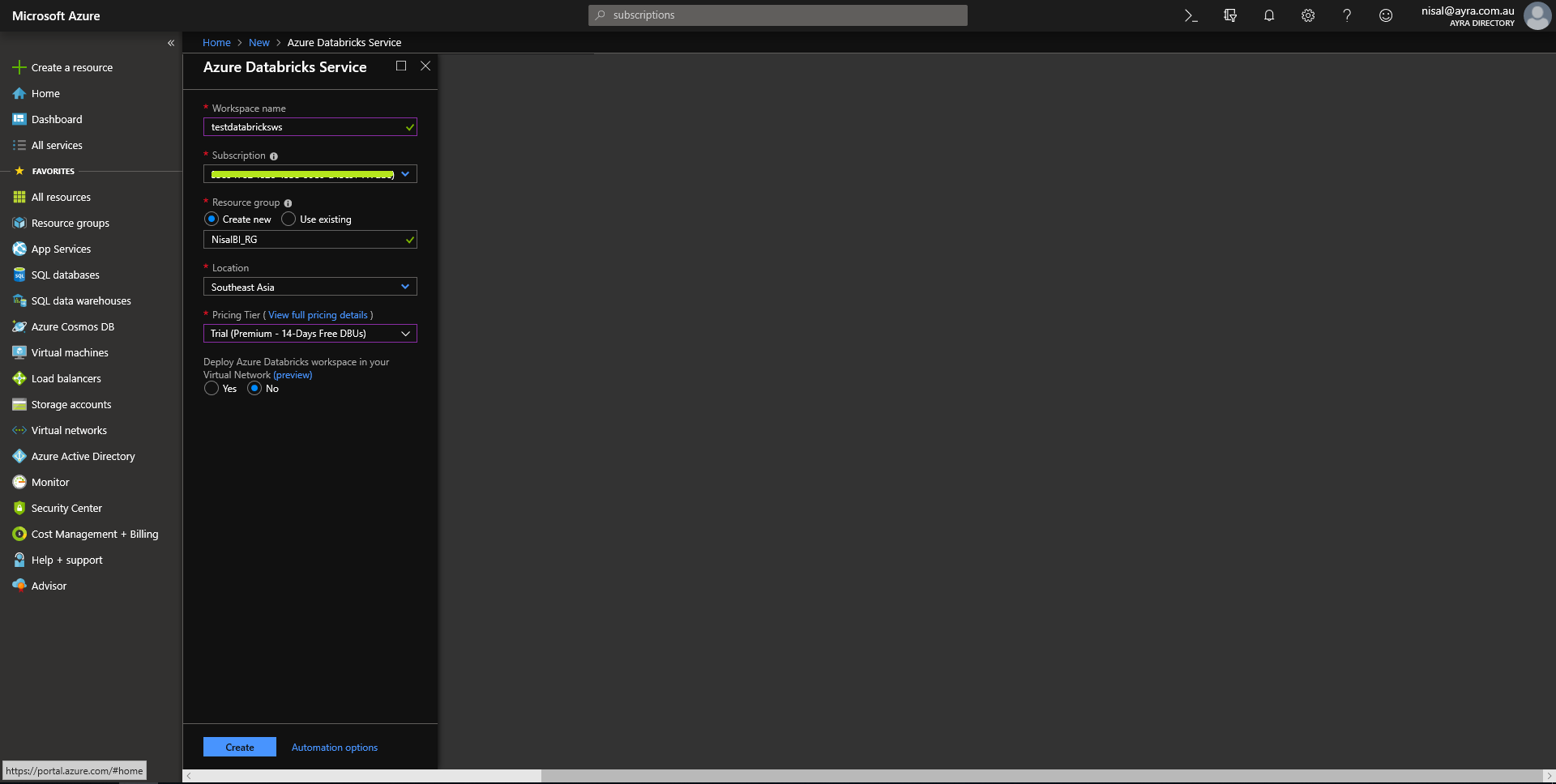

Then provide the necessary details for the Databricks service, as below.

In this case we’ve used 'testdatabricksws' as the Workspace name.

Then select the Subscription to use for the Databricks workspace.

Select an existing resource group or create a new one. We’ve already created a resource group.

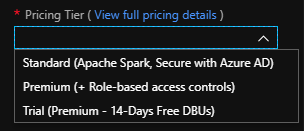

For the pricing tier, we’ve selected Trial - Premium 14 days, which allows creating a Spark cluster with premium resources. The trial lasts only 14 days, which is more than enough for a POC. For a real-world project, we would need to choose either Standard or Premium. See the pricing details at https://azure.microsoft.com/en-us/pricing/details/databricks/
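The same choices made in the portal form (workspace name, resource group, pricing tier) can also be written down as an ARM template. The sketch below is a minimal illustration in Python, not the portal's own output: it assumes the `Microsoft.Databricks/workspaces` resource type with API version `2018-04-01` and SKU names `standard`, `premium`, and `trial`. The region `eastus` and the managed resource group name are hypothetical values chosen only for the example.

```python
import json

# Pricing tier names as the ARM resource type is assumed to spell them.
VALID_SKUS = {"standard", "premium", "trial"}


def build_workspace_template(workspace_name, location, sku, managed_rg_name):
    """Return an ARM deployment template (as a dict) describing one workspace."""
    if sku not in VALID_SKUS:
        raise ValueError(f"unknown pricing tier: {sku!r}")
    return {
        "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#",
        "contentVersion": "1.0.0.0",
        "resources": [
            {
                "type": "Microsoft.Databricks/workspaces",
                "apiVersion": "2018-04-01",  # assumed API version
                "name": workspace_name,
                "location": location,
                "sku": {"name": sku},
                "properties": {
                    # Databricks keeps its own infrastructure in a separate,
                    # managed resource group; the name here is hypothetical.
                    "managedResourceGroupId": (
                        "[concat(subscription().id, '/resourceGroups/', "
                        f"'{managed_rg_name}')]"
                    )
                },
            }
        ],
    }


# 'testdatabricksws' and the trial tier come from the walkthrough;
# 'eastus' and the managed resource group name are placeholders.
template = build_workspace_template(
    "testdatabricksws", "eastus", "trial", "databricks-rg-testdatabricksws"
)
print(json.dumps(template, indent=2))
```

Switching from the trial to a real-world tier would then only mean passing `"standard"` or `"premium"` as the `sku` argument.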

The final option is whether to deploy the Azure Databricks workspace in our own Virtual Network. Enabling it deploys the Azure Databricks workspace resources into a virtual network that we manage ourselves. Read more at https://docs.azuredatabricks.net/administration-guide/cloud-configurations/azure/vnet-inject.html
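To make the Virtual Network option more concrete, here is a rough sketch of the extra settings a workspace definition carries when it is deployed into our own network. It assumes the workspace resource accepts `customVirtualNetworkId`, `customPublicSubnetName`, and `customPrivateSubnetName` under `properties.parameters`; the subscription ID, resource group, network, and subnet names are all hypothetical placeholders.

```python
def vnet_resource_id(subscription_id, resource_group, vnet_name):
    """Build the full Azure resource ID of a virtual network."""
    return (
        f"/subscriptions/{subscription_id}"
        f"/resourceGroups/{resource_group}"
        f"/providers/Microsoft.Network/virtualNetworks/{vnet_name}"
    )


def vnet_injection_parameters(vnet_id, public_subnet, private_subnet):
    """Return the 'parameters' block for a workspace deployed in our own VNet.

    The key names are assumptions about the workspace resource schema.
    """
    return {
        "customVirtualNetworkId": {"value": vnet_id},
        "customPublicSubnetName": {"value": public_subnet},
        "customPrivateSubnetName": {"value": private_subnet},
    }


# All identifiers below are placeholders for illustration only.
vnet_id = vnet_resource_id(
    "00000000-0000-0000-0000-000000000000", "test-rg", "databricks-vnet"
)
params = vnet_injection_parameters(vnet_id, "public-subnet", "private-subnet")
print(params)
```

When the option is left disabled, none of these settings are needed and Azure Databricks creates and manages the network itself.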

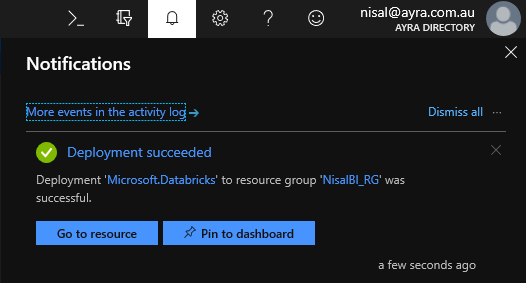

Once we click Create, it will take a few seconds to create the Azure Databricks workspace for us. Once it has completed, it will notify us like this.

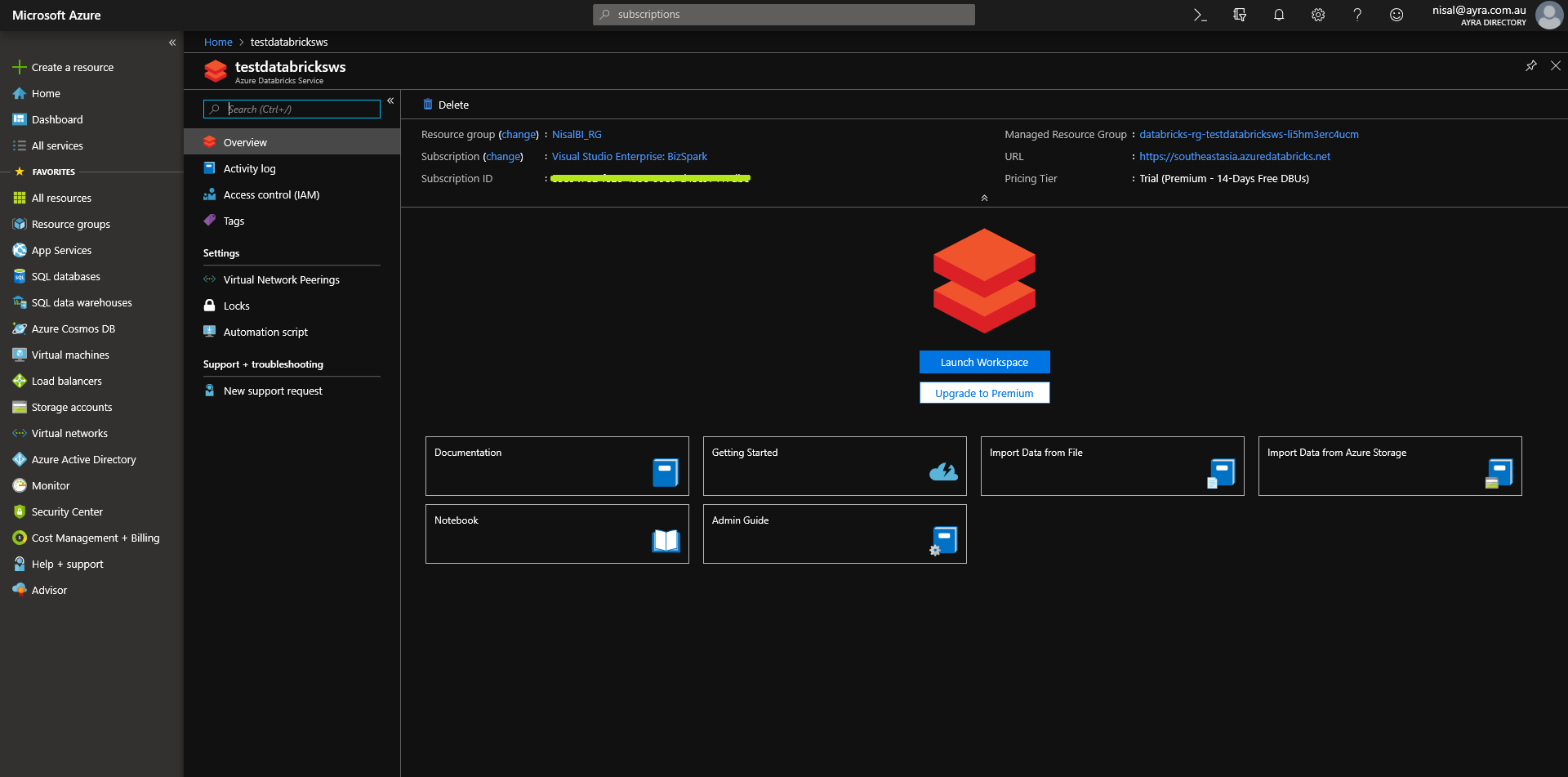

Click the created workspace to open the Overview tab of the Databricks environment.

Click the Launch Workspace button to open the Databricks workspace we created. We may need to sign in to Databricks using the same Azure account.

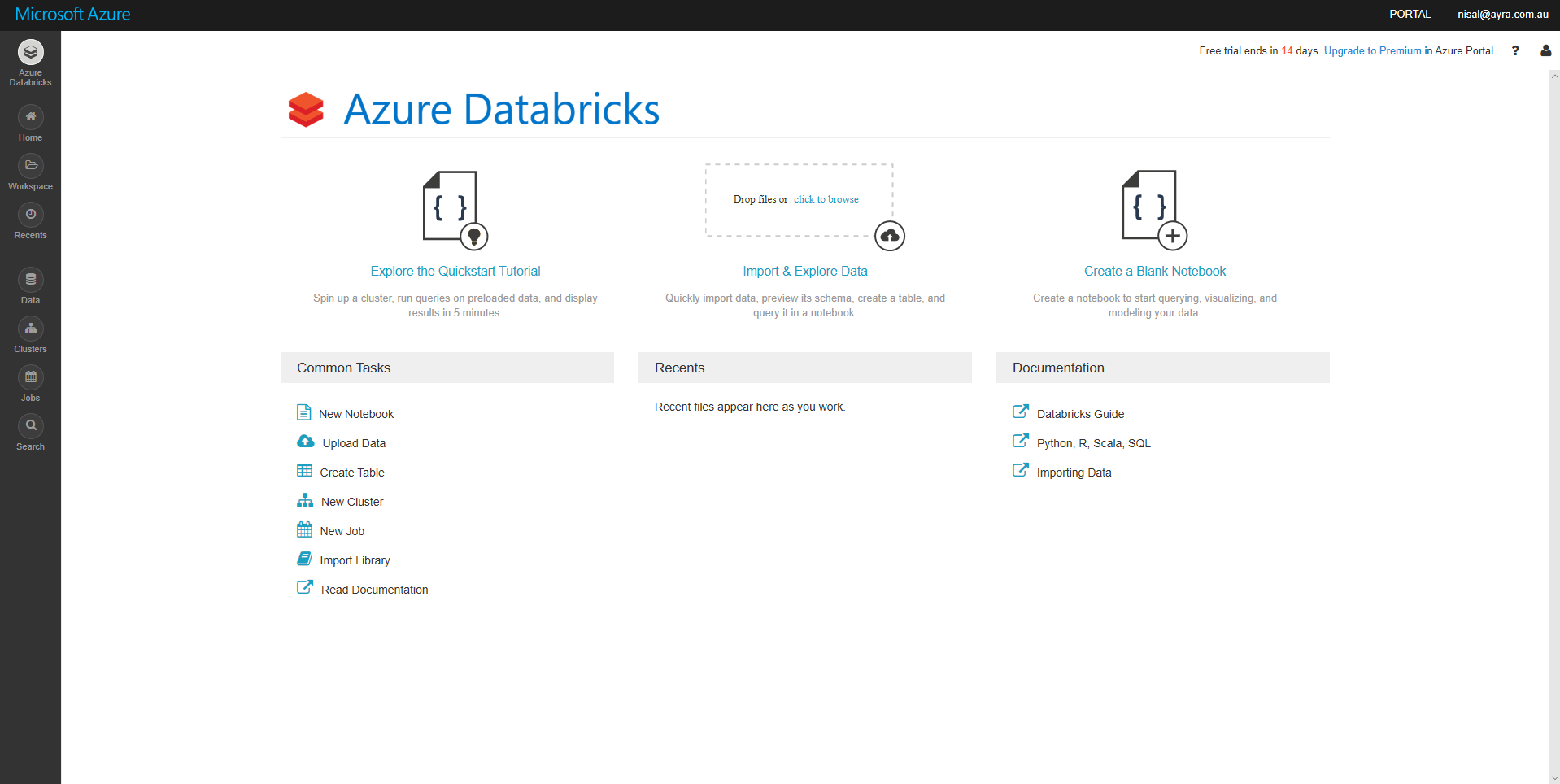

Finally, we have landed in the Azure Databricks workspace. Here we can perform many tasks, such as creating Spark clusters, defining and streamlining workflows, creating notebooks in Scala, SQL, Python, or R, and running programs.

We will discuss how to create a Spark cluster in another article.